The New TV Tech: What You Need to Know

The arrival of 4K/Ultra HDTV has introduced a whole new set of complications for shoppers to confront before they take the plunge. Many of the sets we’ve seen in 2015 sport curved screens, emerging display technologies, and the capabilities to handle the growing range of UHDTV sources, whether streamed or stored on forthcoming physical media. And those sources themselves sport a range of advanced features, not all of which can be handled by even the latest TVs.

Confused? Frightened? Put off? Don’t be. All you need to know can be broken down into a few key areas, which I will lay out for you below in detail. The truth is, no matter what their capabilities are, all the high-end UHDTVs that have come out in the past year will be backward-compatible with your existing gear (Blu-ray player, cable/satellite DVR, streamers, etc.). That said, having an understanding of what’s new, and what each feature brings to the table, will help you make a sound decision about which set to invest in.

Thrown a Curve

One recent, dramatic change in TVs is the use of a curved screen, as seen in lineups from, primarily, Samsung and LG. Buyers walking into a store and coming across curved screens among the rows of flat-screen models are bound to wonder, “Are curved sets better?”

The answer: No. Curved screens are part marketing gimmick pushed by TV makers to allow certain sets to stand out from the crowd, part one-upmanship between those two Korean companies. Not only will a curved screen do (essentially) nothing to improve picture quality, but it usually comes at a price premium over regular flat-screen models.

The main point that both Samsung and LG make in defense of the curve is that it creates a more immersive, cinematic viewing experience. There’s some legitimacy to that argument, but when a 55- or 65-inch curved screen (the most common) is viewed at a typical 8-to-9-foot seating distance, any potential for visual immersion disappears because the screen’s left and right edges fall way short of extending into your peripheral vision, as they would at a movie theater. The only current models with any potential to create an immersive experience are Samsung’s 88-inch and LG’s 105-inch curved-screen LCD Ultra HDTVs, and those cost $20,000 and $100,000, respectively.
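The seating-distance argument can be checked with numbers. Here is a minimal Python sketch that estimates how much of your horizontal view a 16:9 screen fills for a viewer centered in front of it. It treats the panel as flat, which is a simplification for a curved set. The function names are illustrative only.

```python
import math

def screen_width_in(diagonal_in: float, aspect_w: float = 16, aspect_h: float = 9) -> float:
    """Width of the picture area in inches, from the diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)

def horizontal_view_angle(diagonal_in: float, distance_ft: float) -> float:
    """Horizontal angle (degrees) the screen fills for a viewer centered in front of it.

    Simplification: the panel is treated as flat, even for curved sets.
    """
    half_width_in = screen_width_in(diagonal_in) / 2
    return math.degrees(2 * math.atan(half_width_in / (distance_ft * 12)))

for size in (55, 65):
    for distance in (8, 9):
        angle = horizontal_view_angle(size, distance)
        print(f"{size}-inch at {distance} ft fills about {angle:.0f} degrees")
```

For these four size and distance combinations, the results range from roughly 25 to 33 degrees. That is a small slice of a human horizontal field of view that spans well over 180 degrees.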

Along with stirring up consumer confusion, curved TVs can actually degrade picture quality. The main problem: A slight bowed effect appears at the top and bottom of the picture when you watch ultra-wide letterboxed movies. Also, off-axis picture uniformity with LCD models can be worse than it would be with a flat-screen model, possibly resulting in heightened black levels and faded colors. They might also produce exaggerated reflections from room lights, depending on where you’re sitting. Meanwhile, curved screens are awkward (though not impossible) to mount on a wall. Bottom line: If the cosmetics of a curved panel speak to you, by all means, have at it. But don’t buy into the hype that it’ll do anything for image quality.

4K/Ultra HDTV

When you’re shopping for a new set today, perhaps the biggest decision you’ll have to make is whether to buy a regular HDTV or an Ultra HDTV. The most basic difference between the two is resolution: Whereas HDTV screens contain 1920 horizontal x 1080 vertical pixels, UHDTVs have a pixel grid that’s 3840 x 2160. That’s more than 8 million pixels, four times as many as a regular HDTV has. While those specs might seem impressive, in most cases all those extra pixels don’t have a huge impact. Why? Blame the limits of human visual acuity: At typical screen sizes (50 to 65 inches) viewed from typical seating distances, the eye simply can’t resolve UHDTV’s added detail. Where UHDTVs can have greater impact, and a rather dramatic one at that, is in their ability to display images with a wider color gamut and high dynamic range (HDR). Let’s cover each one separately.
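The resolution argument can also be checked with a little arithmetic. The sketch below first confirms the 4x pixel count. It then estimates the farthest distance at which a viewer can still pick out individual pixels. This assumes the common rule of thumb that 20/20 vision resolves detail about 1 arcminute across. The 1-arcminute figure and the helper names are assumptions for illustration, not a formal standard.

```python
import math

# Assumption: 20/20 vision resolves detail about 1 arcminute (1/60 degree) across.
ONE_ARCMINUTE = math.radians(1 / 60)

def pixel_count(width_px: int, height_px: int) -> int:
    """Total pixels in a width x height grid."""
    return width_px * height_px

def max_useful_distance_ft(diagonal_in: float, horizontal_px: int) -> float:
    """Farthest distance (feet) at which one pixel still spans 1 arcminute on a 16:9 screen."""
    width_in = diagonal_in * 16 / math.hypot(16, 9)
    pixel_pitch_in = width_in / horizontal_px
    return pixel_pitch_in / math.tan(ONE_ARCMINUTE) / 12

print(pixel_count(3840, 2160) / pixel_count(1920, 1080))  # 4.0
for px, label in ((1920, "1080p"), (3840, "4K")):
    print(f"65-inch {label}: pixels blend together beyond ~{max_useful_distance_ft(65, px):.1f} ft")
```

For a 65-inch set, this works out to roughly 8.5 feet for 1080p and about 4.2 feet for 4K. From a typical 8-to-9-foot seat, a 20/20 eye is therefore already near the limit of resolving plain HD detail.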


More, Better Color

The current HDTV system (broadcasts, Blu-ray, streamed video) uses the Rec. 709 color space. Your eyes, however, can take in a much wider range of color than Rec. 709 offers. That’s why the color gamut for Ultra HDTV has been expanded to allow for the much wider P3 color space used in theatrical digital cinema. You’ll also likely hear or read about an even wider color space, ITU-R BT.2020 (usually shortened to Rec. 2020), in connection with Ultra HD’s capabilities. But no consumer set we know of can currently display it, so don’t expect UHD sources to take full advantage of it any time soon.
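One way to compare the gamuts is to plot each standard's red, green, and blue primaries on the CIE 1931 xy chromaticity chart and measure the triangle they form. The sketch below does this with the shoelace formula, using the published primaries for Rec. 709, DCI-P3, and Rec. 2020. Keep in mind that xy area is only a rough yardstick, because the chart is not perceptually uniform.

```python
# CIE 1931 xy chromaticity coordinates of each standard's red, green, and blue primaries
PRIMARIES = {
    "Rec. 709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec. 2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}

def triangle_area(points):
    """Shoelace formula: area of the triangle spanned by three (x, y) primaries."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2

base = triangle_area(PRIMARIES["Rec. 709"])
for name, pts in PRIMARIES.items():
    area = triangle_area(pts)
    print(f"{name:9s} area {area:.4f} ({area / base:.2f}x Rec. 709)")
```

By this measure, DCI-P3 covers about 1.36 times the area of Rec. 709, and Rec. 2020 covers about 1.89 times.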

Along with the size of the color space, which defines how wide the range of color goes, another critical specification for color is bit depth. This determines how finely the TV can divide that range into individual hues. More bits means finer gradations of color, which helps minimize the visible banding that can occur when the set has too few shades to choose from.
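Banding is easiest to see on a smooth gradient. This hypothetical sketch spreads a black-to-white ramp across one 3840-pixel UHD row and measures how wide each flat step ends up at a given bit depth. It deliberately ignores gamma and the dithering real TVs use to hide steps, so treat it only as an illustration of the principle.

```python
def quantize(value: float, bits: int) -> int:
    """Map a 0.0-1.0 signal level to the nearest code value at the given bit depth."""
    max_code = (1 << bits) - 1
    return round(value * max_code)

def average_band_width(width_px: int, bits: int) -> float:
    """Average width in pixels of each flat band when a black-to-white ramp spans the row."""
    codes = [quantize(x / (width_px - 1), bits) for x in range(width_px)]
    return width_px / len(set(codes))

for bits in (8, 10):
    print(f"{bits}-bit: each step is about {average_band_width(3840, bits)} pixels wide")
```

At 8 bits, each step is 15 pixels wide. At 10 bits, it shrinks to 3.75 pixels, which is why the extra bits make gradients look smoother.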

Our current HD system uses 8-bit color encoding for the red, green, and blue color channels that make up video images. Do the math, and you’ll find that your HDTV is capable of displaying up to 16.8 million possible colors. Seems like a lot, right? But Ultra HDTV is capable of 10-bit color encoding. When a video source with 10-bit color is displayed on a compatible TV, the end result is a potential 1 billion colors. While final specs for UHDTV broadcasting (ATSC 3.0) have yet to be hammered out, the specs for the forthcoming UHD Blu-ray format support 10-bit color, and both Netflix and Amazon have announced plans to deliver 4K content with an extended color range.
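Here is the math. Each channel gets 2 raised to the power of its bit depth in levels, and the three channels multiply together:

```python
def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors when each channel has 2**bits levels."""
    levels = 2 ** bits_per_channel  # shades available to each of red, green, and blue
    return levels ** channels

for bits in (8, 10):
    print(f"{bits}-bit: {2 ** bits} levels per channel, {total_colors(bits):,} colors")
```

This prints 16,777,216 colors for 8-bit and 1,073,741,824 for 10-bit.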

Unfortunately, not all Ultra HDTVs fully support both the P3 color gamut and 10-bit color, so you’ll need to do careful research when shopping for a new set. Here’s one thing to keep in mind: 10-bit color support is a prerequisite for another new TV development, high dynamic range (HDR).

More, Better Brightness

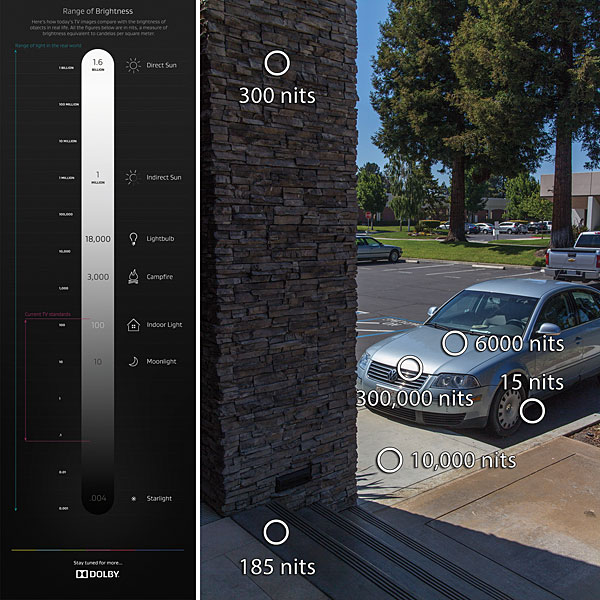

HDR refers to images with a brightness range beyond what’s usually contained in HDTV or UHDTV content. Blacks are deeper and have greater detail, and peak whites are brighter and show more detail in highlights. Here’s how the process unfolds. HDR images are captured by new digital cinema cameras capable of at least 15 stops of dynamic range (film, too, can capture a wider dynamic range than is usually exploited). During post-production, the extended brightness information is converted to metadata that’s embedded alongside the Ultra HD video stream. At home, a set with a 10-bit display that can read the HDR metadata dynamically adjusts its picture to convey the extended brightness information.
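Cinematographers measure dynamic range in stops, and each stop doubles the amount of light. This small sketch converts between stops and an equivalent contrast ratio. The function names are illustrative only.

```python
import math

def stops_to_contrast(stops: float) -> float:
    """Each stop doubles the light, so n stops span a 2**n : 1 brightness range."""
    return 2.0 ** stops

def contrast_to_stops(ratio: float) -> float:
    """Inverse conversion: the number of doublings needed to reach the ratio."""
    return math.log2(ratio)

print(f"15 stops = {stops_to_contrast(15):,.0f}:1")
```

By this conversion, the 15-stop minimum corresponds to a brightness range of 32,768:1.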

Among the UHDTVs introduced in 2015 that are HDR compatible (meaning that they’ll recognize an HDR-encoded signal and make use of it) are Samsung’s JS9500 series LCDs and some of its other SUHD models, Sony’s X940C and X930C LCD sets (plus the X910C, X900C, and X850C via a forthcoming firmware upgrade that may be in place by the time you read this), LG’s EG9600 and EF9500 series OLED models, Panasonic’s TC-65CX850U LCD TV, and Vizio’s 65-inch and 120-inch ($130,000, ouch!) Reference series TVs. (Watch for our First Look at the Vizio RS120-B3.)