1080i vs. 1080p

The most frequently asked questions I've received this year have been about the difference between 1080i and 1080p. Many people felt—or others erroneously told them—that their brand-new 1080p TVs were actually 1080i, as that was the highest resolution they could accept on any input. I did a blog post on this topic and received excellent questions, which I followed up on. It is an important enough question—and one that creates a significant amount of confusion—that I felt I should address it here, as well.

There Is No Difference Between 1080p and 1080i

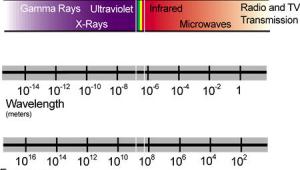

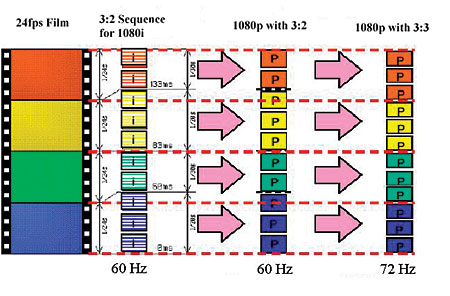

My bold-printed, big-lettered breaker above is a little sensationalistic, but, as far as movies are concerned, it is basically true. Here's why. Movies (and most TV shows) are shot at 24 frames per second (either on film or on 24-frame-per-second HD cameras). Virtually every TV sold in the United States has a refresh rate of 60 hertz, which means the screen refreshes 60 times per second. To show 24-frame-per-second content on a display that essentially shows 60 frames per second, you need to create new frames. This is accomplished by a method called 3:2 pulldown (or, more accurately, 2:3 pulldown). It doubles the first frame of film, triples the second frame, doubles the third frame, and so on, creating a 2-3-2-3-2-3 sequence. Every pair of film frames fills five refreshes, so 24 film frames fill exactly 60. (Check out Figure 1 for a more colorful depiction.) The new frames don't contain new information; they are just duplicates of the original film frames. This is how 24-frame-per-second film ends up on a 60-Hz display.
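For readers who like to see the arithmetic, the 2-3-2-3 cadence described above can be sketched in a few lines of Python. This is only an illustration, not what any player's hardware actually runs: the function name `pulldown_2_3` and the idea of labeling film frames 0 through 23 are my own assumptions.

```python
from itertools import cycle

def pulldown_2_3(film_frames):
    """Repeat film frames in a 2-3-2-3 cadence (illustrative helper, not a real player API)."""
    shown = []
    for frame, repeats in zip(film_frames, cycle((2, 3))):
        shown.extend([frame] * repeats)
    return shown

one_second_of_film = list(range(24))           # 24 distinct film frames, labeled 0..23
one_second_on_screen = pulldown_2_3(one_second_of_film)

print(len(one_second_on_screen))               # 60 refreshes filled
print(one_second_on_screen[:10])               # [0, 0, 1, 1, 1, 2, 2, 3, 3, 3]
print(len(set(one_second_on_screen)))          # 24 distinct pictures among them
```

Sixty refreshes go out, but only 24 different pictures are among them, which is the whole point.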

It's Deinterlacing, Not Scaling

HD DVD and Blu-ray content is 1080p/24. If your player outputs a 60-Hz signal (that is, one that your TV can display), the player is adding (creating) the 3:2 sequence. So, whether you output 1080i or 1080p, it is still inherently the same information. The only difference is whether the player interlaces the signal and your TV deinterlaces it, or the player just sends out the 1080p signal directly. If the TV correctly deinterlaces 1080i, then there should be no visible difference between deinterlaced 1080i and direct 1080p (even with that extra step). The progressive signal carries no new information and no more resolution, despite what some people think. As you can see in Figure 1, it's all based on the same original 24 frames per second.
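Here is a minimal sketch of why the interlace-then-deinterlace round trip loses nothing when it is done correctly. It assumes both fields are cut from the same film frame and that the TV simply weaves the matching pair back together. The function names and the stand-in "frame" (a list of line labels rather than real pixels) are hypothetical.

```python
def interlace(frame):
    """Split a progressive frame (a list of scan lines) into two fields."""
    top_field = frame[0::2]       # lines 0, 2, 4, ...
    bottom_field = frame[1::2]    # lines 1, 3, 5, ...
    return top_field, bottom_field

def weave(top_field, bottom_field):
    """Deinterlace by weaving two matching fields back into one frame."""
    frame = [None] * (len(top_field) + len(bottom_field))
    frame[0::2] = top_field
    frame[1::2] = bottom_field
    return frame

# A stand-in 1080-line frame; each "line" is just a label, not pixel data.
progressive_frame = [f"line {n}" for n in range(1080)]

top, bottom = interlace(progressive_frame)
restored = weave(top, bottom)

print(len(top), len(bottom))            # 540 540
print(restored == progressive_frame)    # True
```

Each field holds 540 lines, and weaving the right two fields together restores all 1,080. A TV that pairs up the wrong fields, or that doesn't weave at all, is a different story.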

In the case of Samsung's BD-P1000 Blu-ray player, the player interlaces the image and then deinterlaces it to create 1080p. So, you get that step regardless.

Two caveats: Other Blu-ray players can output 1080p/24. If your TV can accept 1080p/24, then the TV is adding the 3:2 sequence, unless it is one of the very few TVs that can change its refresh rate. Pioneer plasmas can change their refresh rate to 72 Hz, and they do a simple 3:3 pulldown (showing each film frame three times). This looks slightly less jerky.
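A rough way to see where the jerkiness comes from is to work out how long each film frame stays on screen under each cadence. The sketch below assumes nothing beyond the refresh rates and repeat counts mentioned above; the helper name `hold_times_ms` is made up for illustration.

```python
from fractions import Fraction

def hold_times_ms(repeat_pattern, refresh_hz):
    """How long each film frame stays on screen, in milliseconds (exact fractions)."""
    refresh_period_ms = Fraction(1000, refresh_hz)
    return [repeats * refresh_period_ms for repeats in repeat_pattern]

cadence_60 = hold_times_ms((2, 3), 60)   # 2:3 cadence on a 60-Hz display
cadence_72 = hold_times_ms((3, 3), 72)   # 3:3 cadence on a 72-Hz display

print([round(float(t), 1) for t in cadence_60])
print([round(float(t), 1) for t in cadence_72])
```

At 60 Hz, film frames alternate between roughly 33 and 50 milliseconds on screen. At 72 Hz, every frame gets the same 41.7 milliseconds or so, which is why motion looks a bit smoother.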

If you're a gamer, then there is a difference, as 1080p/60 from a computer can be 60 different frames per second (instead of 24 different frames per second doubled and tripled, as with movie content). It is unlikely that native 1080p/60 content will ever be broadcast or widely distributed. The reasons for this are too numerous to get into here, but I list them in my follow-up blog post.

So Don't Worry (Or Only Worry a Little)

Without question, it would be better if all TVs accepted a 1080p input. (Read that again before you start sending your e-mails.) What I hope this article points out is that, if you have a 1080p TV that only accepts 1080i, you're not missing any resolution from the Blu-ray or HD DVD source. If a TV doesn't correctly deinterlace 1080i, on the other hand. . .well, that's a different article (which is conveniently located on page 64).