Are Blind Audio Comparisons Valuable?

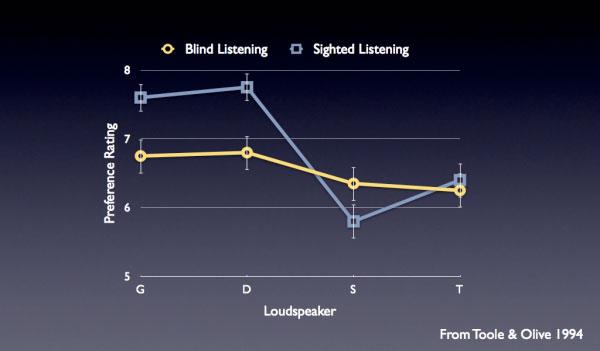

In this week's episode of the Home Theater Geeks podcast, Home Theater and Stereophile contributor Steve Guttenberg argues that blind comparisons of audio products are meaningless, for several reasons. First, he claims, most people cannot reliably discern the difference between similarly performing products, and perhaps not even between products that perform quite differently. As the graph above shows, listening tests conducted by Floyd Toole and Sean Olive found that blind comparisons of four speakers produced far more similar preference ratings than the same comparisons in which the listeners knew which speaker they were hearing.

Guttenberg also maintains that a listener's hearing is psychophysiologically biased by the sound of one product while listening to the next. Finally, he argues, the conditions under which a test is conducted rarely match those in any given consumer's room, so the results mean nothing when it comes to deciding what to buy.

Do you agree? Are blind comparisons of audio products valuable? On what do you base your position?

Are Blind Audio Comparisons Valuable?

Yes, blind audio comparisons are valuable

86% (995 votes)

No, blind audio comparisons are not valuable

14% (168 votes)

Total votes: 1163