Ridding the World of Bad Sound Page 2

Third, when correcting the impulse response, the above two points apply even more strongly. Impulse response correction is important because a loudspeaker measured in a room is non-minimum phase, so the conventional approach of using a minimum-phase filter will not suffice. At the same time, impulse response correction must be done even more conservatively than frequency response correction, since our auditory system is very sensitive to artifacts such as pre-ringing, which is generated if a non-minimum-phase filter is used and not perfectly tuned to the room/speaker. Therefore, our impulse response correction uses all measurements to determine which parts of the impulse response are common to all positions (and therefore likely to be the same in positions not measured) and then tries to optimize these aspects.
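To make the "common to all positions" idea concrete, here is a minimal sketch in Python. It is not Dirac's patented method, only an illustration under simple assumptions: the impulse responses are time-aligned, equally long, and compared sample by sample. The function name `common_component` and the tolerance `max_spread` are hypothetical.

```python
from statistics import mean, pstdev

def common_component(responses, max_spread=0.2):
    """Keep only the samples that agree across all measurement positions.

    responses:  equal-length impulse responses (lists of floats), one per
                microphone position. Assumed to be time-aligned.
    max_spread: hypothetical tolerance, as a fraction of the average value.
    """
    common = []
    for samples in zip(*responses):
        avg = mean(samples)
        spread = pstdev(samples)
        # A sample counts as "common" when the positions differ only
        # slightly relative to the average; otherwise it is left alone (0.0).
        if abs(avg) > 0 and spread <= max_spread * abs(avg):
            common.append(avg)
        else:
            common.append(0.0)
    return common

# Three made-up measurements: the direct sound (first two samples) agrees,
# while the later reflections differ from seat to seat.
measurements = [
    [1.00, 0.50, 0.30, -0.20],
    [0.98, 0.52, -0.10, 0.25],
    [1.02, 0.48, 0.05, 0.10],
]
target = common_component(measurements)
print(target)
```

In this toy case only the first two samples survive as a correction target. The position-dependent tail is left untouched, which is the conservative behavior described above.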

We patented this unique approach quite a few years back and subsequently wrote a number of articles on it. A working impulse response correction has a strong impact on staging, imaging, and clarity. Overall, all spatial attributes are improved beyond what a minimum-phase filter approach can achieve. This is because we as listeners determine the positioning and spatial distinctness of a sound by the degree of similarity of the signals reaching our two ears. The impulse responses of individual speakers in a room differ significantly and thereby reduce the similarity of the signals, hence destroying the intended sound image. In my mind, staging and imaging are key to generating a convincing stereo (and of course surround) reproduction that sounds transparent and natural.
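The effect of mismatched impulse responses on signal similarity can be sketched numerically. The example below is an illustration only: it assumes a zero-lag normalized correlation as the similarity measure, and the source signal and impulse responses are invented values, not measurements.

```python
import math

def convolve(signal, impulse_response):
    """Plain discrete convolution of two lists of floats."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out

def similarity(left, right):
    """Normalized correlation at zero lag; 1.0 means identical up to gain."""
    dot = sum(l * r for l, r in zip(left, right))
    norm = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return dot / norm if norm else 0.0

# The same source played through two paths (all values are made up).
source = [1.0, -0.5, 0.25, 0.0, 0.1]
path_a = [1.0, 0.3, 0.0]    # hypothetical speaker/room response
path_b = [1.0, 0.0, -0.6]   # a different hypothetical response

matched = similarity(convolve(source, path_a), convolve(source, path_a))
mismatched = similarity(convolve(source, path_a), convolve(source, path_b))
print(matched, mismatched)
```

When both paths share one response the similarity stays at essentially 1.0. With differing responses it drops, which is the loss of similarity that blurs the sound image.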

S&V: Are any new home audio/theater-related products or technical refinements on the horizon?

MJ: We are currently working on a number of improvements for the Dirac Live software, in addition to a few algorithm refinements. In fact, there will be a completely new Dirac Live calibration software coming out in a few months.

Even more exciting, 2017 will be the year that we finally showcase a Dirac Unison calibration tool for hi-fi use. Our challenge and our goal with this project have been to retain the performance benefits of Dirac Unison while simultaneously creating an intuitive, not-too-technical calibration tool. 2017 marks the year that we deliver on this goal, which we at Dirac feel constitutes a major milestone in a new generation of digital room-correction systems. While expectations for Dirac Unison are quite high, we’re confident that it will not disappoint!

S&V: At CES 2017 you introduced the Dynamic 3D Audio AR/VR platform, which is designed to make audio for virtual reality applications more realistic. Tell us about it.

MJ: We showed the first example of our Dynamic 3D Audio AR/VR platform, the Dirac VR technology, which renders positional sound using head-tracking and headphones with a greater degree of accuracy than ever before. For example, our demo involved the audio rendering a helicopter hovering just yards in front of the participant. Regardless of which direction the participant’s head moved—up, down, left or right—he or she experienced zero change in position of the helicopter.
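The basic geometry behind keeping a source fixed while the head turns can be sketched as follows. This is a simplified illustration, not the Dirac VR implementation: it covers only horizontal rotation (yaw), and it assumes angles in degrees with positive values to the listener's left. The function name `relative_azimuth` is hypothetical.

```python
def relative_azimuth(source_azimuth_deg, head_yaw_deg):
    """Direction of a world-fixed source as seen from the rotated head.

    Both angles are in degrees (assumed convention: positive = left).
    The result is wrapped to the range [-180, 180).
    """
    return (source_azimuth_deg - head_yaw_deg + 180.0) % 360.0 - 180.0

# A helicopter fixed straight ahead in the room (0 degrees).
helicopter = 0.0
for yaw in (0.0, 30.0, -45.0, 170.0):
    # The renderer must pick the filter for this head-relative angle so
    # that the helicopter appears to stay put in the room.
    print(yaw, relative_azimuth(helicopter, yaw))
```

Turning the head 30 degrees to the left means the source must be rendered 30 degrees to the right of the nose, and so on. A full renderer would also track pitch and roll and update the filters continuously.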

We also demonstrated how we can simulate a reference stereo listening environment over headphones, without requiring individual calibration of the listener. In VR, true immersion means that sound quality requirements are greater and more important than in most other applications. Any acoustic inaccuracy immediately breaks the illusion of being elsewhere. Therefore, in order to create the virtual listening experience, we had to pay attention to every tiny detail of the technology to reproduce a sound at every angle and distance from the listener.

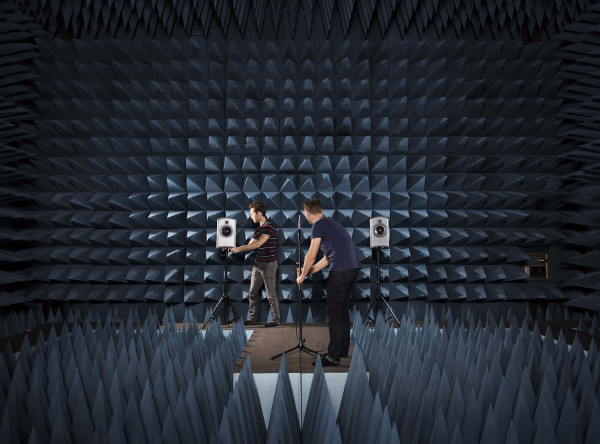

We pioneered a couple of concepts that were critical to achieving the high-end performance standards we knew we were capable of. One is the Dynamic HRTF approach. HRTFs (Head-Related Transfer Functions) are required to adequately account for the coloration of an incoming sound that the head and torso impose, and are instrumental for the positioning of the sound. However, existing HRTFs have flaws in several dimensions. First, they are static and don’t account for the dynamic movements of one’s head in relation to the torso. We therefore invented dynamic HRTFs which, of course, had to be measured on a number of individuals. This was a huge challenge.

It was necessary to measure them with high angular resolution while also removing all sources of noise and errors. It is well known that existing HRTF databases suffer from significant inaccuracies and errors. From there, we then developed a universal set of dynamic HRTFs that obviate the need for individual HRTFs. That was a great relief, and somewhat unexpected, as current teachings are that for proper sound localization one needs individually calibrated HRTFs. Just as with room correction, the trick is to not over-compensate, but rather use only the common traits of a population’s HRTFs.

We then leveraged our years of experience creating natural reverb from various venues and translating it to other venues. This is also needed when you want to render a sound scene transparently over headphones. There were a number of other details to be considered, and of course we needed to optimize the headphones themselves to make sure they introduce minimum coloration to the sound, as well.

In the end, the technology now allows us to re-create, for example, a high-end studio listening experience over headphones, which we demonstrated at CES. For home cinema enthusiasts, the technology allows us to render a surround sound or Atmos setup over headphones and still get the experience as if you were in a reference listening room. This has great potential for high-end headphone use.