UHD Blu-ray vs. HDMI: Let the Battle Begin Page 2

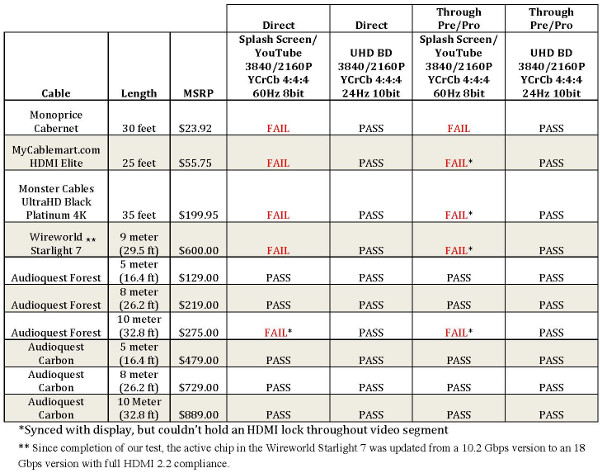

Through my testing, I discovered a couple of things. First, some cables hooked directly from the Samsung to the JVC projector would not sync. In each case, these were the active cables that have a transmitter on the source side and a receiver on the display side of the cable. But when these same cables were used through the Marantz pre/pro, they were at least able to lock on to the signal temporarily (with varying degrees of “sparkles” on the display and dropouts occurring during the YouTube 4K/60Hz video). This leads me to believe that the 5-volt output that the Samsung is spec'd to provide via its HDMI port may be underpowered, considering these cables did work when connected through the pre/pro. I don’t have the equipment necessary to test this theory, so it's still conjecture. But that's a logical conclusion.

Of all of the cables, I was particularly impressed with the Audioquest models for a number of reasons. First, the build quality is extremely impressive. They provide a snug fit in the HDMI port, and both the Forest and Carbon cables were able to lock onto a high-bandwidth signal at a length of 8 meters (26 feet), which is very impressive. At 10 meters, the Forest was able to negotiate a handshake for an instant, but there were ample “sparkles” on the screen and it would lose connectivity every 60 seconds or so. That wasn’t the case with the 10-meter Carbon cable, which was able to sync and never lost the signal. Audioquest chalks this up to the fact that the Carbon uses 5 percent silver in and on its conductor (versus 0.5 percent on the Forest), and the added silver does make a difference. But silver isn’t cheap, which is why the Carbon cables cost more than three times as much as the Forest cables at my tested lengths, peaking at $889 for the 10-meter size. That's more than twice the cost of Samsung's UHD Blu-ray player at its current $399 asking price. I guess you get what you pay for, and in this case, it’s silver.

Can't Live With It, Can't Live...

In the end, I’ve come to the following conclusion: HDMI sucks. First, it’s a pain to pull through walls and conduit given its large connector. Second, it comes unplugged too easily. And third, it’s not very install-friendly over longer lengths because the signal degrades rather substantially.

My testing highlights the worst-case, most demanding scenario for HDMI bandwidth: a 3840 x 2160p YCbCr 4:4:4 signal at 60 Hz. We really won’t see that in commercially produced material for years to come, so I wouldn’t panic just yet. On the plus side, the rear of my pre/pro isn’t nearly as cluttered as it used to be. I’m not sure that’s a fair trade, though!

In closing, I’d like to point out that what I could be facing here is an EDID (Extended Display Identification Data) issue specific to my projector that's rearing its ugly head. Samsung has told me that its player’s default output for its home splash screen is supposed to be 2160p/60Hz 4:2:0 8-bit, but the JVC may be requesting a 2160p/60Hz 4:4:4 8-bit signal in the handshaking process and the player is just honoring the request. I've contacted JVC about this as well, and they're looking into their projector's interaction with the new Samsung player. Before I go through the process of snaking a new (and potentially expensive) cable through my wall and ceiling, I’m going to wait a bit and hope that firmware updates from JVC and Samsung will resolve this issue. If not, well, competitive players are said to be due this year from several other manufacturers. Let the UHD Blu-ray upgrade cycle begin!
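To see why the difference between a 4:2:0 and a 4:4:4 handshake matters so much to a long cable, here is a rough back-of-the-envelope sketch in Python. It is my own illustration, not anything from Samsung or JVC. It assumes the standard CTA-861 timing for 2160p/60 (4400 x 2250 total pixels including blanking, for a 594 MHz pixel clock), three TMDS data channels, and 10 bits on the wire for every 8 bits of video. The function and constant names are made up for this example.

```python
# Rough HDMI TMDS bandwidth estimate for 2160p/60 video modes.
# Assumption: CTA-861 timing of 4400 x 2250 total pixels (active + blanking)
# at 60 Hz, which works out to a 594 MHz pixel clock.
H_TOTAL = 4400
V_TOTAL = 2250
REFRESH_HZ = 60

TMDS_CHANNELS = 3        # three data channels carry the video
BITS_PER_TMDS_CHAR = 10  # each 8-bit value is sent as a 10-bit character

# Commonly quoted maximum TMDS throughput figures, in Gbps.
OLDER_LIMIT_GBPS = 10.2  # HDMI 1.4-class links
NEWER_LIMIT_GBPS = 18.0  # HDMI 2.0-class links


def tmds_gbps(chroma: str, bit_depth: int = 8) -> float:
    """Estimate the aggregate TMDS bit rate in Gbps for 2160p/60.

    Only "4:4:4" and "4:2:0" are handled here; 4:2:2 is packed
    differently over HDMI and is left out of this sketch.
    """
    if chroma not in ("4:4:4", "4:2:0"):
        raise ValueError("this sketch only covers 4:4:4 and 4:2:0")

    pixel_clock_hz = H_TOTAL * V_TOTAL * REFRESH_HZ
    # 4:2:0 carries two pixels per TMDS clock, halving the clock rate.
    chroma_scale = 0.5 if chroma == "4:2:0" else 1.0
    # Deep color raises the clock in proportion to the bit depth.
    depth_scale = bit_depth / 8
    tmds_clock_hz = pixel_clock_hz * chroma_scale * depth_scale
    return tmds_clock_hz * TMDS_CHANNELS * BITS_PER_TMDS_CHAR / 1e9


def fits(rate_gbps: float, limit_gbps: float) -> bool:
    """True if the estimated rate is within the given link limit."""
    return rate_gbps <= limit_gbps


if __name__ == "__main__":
    for chroma in ("4:2:0", "4:4:4"):
        rate = tmds_gbps(chroma)
        print(
            f"2160p/60 {chroma} 8-bit: {rate:.2f} Gbps | "
            f"fits 10.2 Gbps: {fits(rate, OLDER_LIMIT_GBPS)} | "
            f"fits 18 Gbps: {fits(rate, NEWER_LIMIT_GBPS)}"
        )
```

Under those assumptions, the 4:2:0 splash screen Samsung describes needs roughly half the bandwidth of the 4:4:4 signal the JVC may be requesting, which would explain why a cable that is comfortable with one can fall apart on the other.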

Update: Following the posting of this story, we were contacted by David Salz, president and founder of Wireworld, who said that the company has recently changed the active chip in the Starlight 7 cable used for this test from the older 10.2 Gbps version to a new 18 Gbps version with full HDMI 2.0 capability.