1080p vs. 720p Displays

Chris Keczkemethy

Actually, I make this very point every time I talk about the difference between 720p and 1080p displays. It's true that you can't see any difference in detail if you're farther than a certain distance from a screen of a given size, but scaling artifacts can still be very visible. And you're exactly right that most HD channels are broadcast at 1080i (1920x1080), so they must be scaled to 1280x720 or 1366x768 (the actual resolution of some so-called "720p" TVs) when viewed on such a set. Of course, the opposite is true if you watch a 720p channel such as ABC, Fox, or ESPN on a 1080p display, so the signal will often be scaled one way or the other no matter what the resolution of your display is.
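To put numbers on that, here is a minimal sketch that computes how much each broadcast format has to be stretched or shrunk to fill each display's pixel grid. The format and resolution tables are assumptions drawn from the figures above, and the helper names are purely illustrative. Note that the 1366x768 panel needs awkward, non-integer ratios for both 720p and 1080i sources.

```python
from fractions import Fraction

# HD broadcast formats mentioned above: (width, height) in pixels.
BROADCAST = {
    "1080i": (1920, 1080),  # e.g. CBS, NBC
    "720p": (1280, 720),    # e.g. ABC, Fox, ESPN
}

# Native pixel grids of typical displays; many "720p" sets are really 1366x768.
DISPLAYS = {
    "1280x720": (1280, 720),
    "1366x768": (1366, 768),
    "1920x1080": (1920, 1080),
}

def scale_factors(src, dst):
    """Exact horizontal and vertical scale factors needed to map src onto dst."""
    (sw, sh), (dw, dh) = src, dst
    return Fraction(dw, sw), Fraction(dh, sh)

def needs_scaling(src, dst):
    """True unless the source already matches the display pixel for pixel."""
    return src != dst

if __name__ == "__main__":
    for fmt, src in BROADCAST.items():
        for name, dst in DISPLAYS.items():
            h, v = scale_factors(src, dst)
            status = "scaled" if needs_scaling(src, dst) else "native"
            print(f"{fmt:>5} -> {name:>9}: {status}, "
                  f"x{float(h):.3f} wide, x{float(v):.3f} tall")
```

Only the pixel grid is modeled here. A 1080i channel on a 1920x1080 panel needs no scaling by this measure, but it still has to be deinterlaced, which is a separate job.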

You are also entirely correct that the real-world impact depends on the incoming signal and the quality of the scaler that's doing the job. Nothing can be done about the quality of the incoming signal, but there are typically several devices in the signal chain that can scale the signal, and you can determine which one does the best job.

To see whether the cable/satellite box, A/V receiver, or TV does the best job, start by setting the output resolution of the cable/satellite box to match the display (720p, or 1080i for a 1080p display). Most cable/satellite boxes cannot output 1080p, so if you have a 1080p display, something else in the signal chain will have to deinterlace the signal. Also, be sure that overscan is disabled in the display, because it enlarges the image and can introduce its own scaling artifacts.

If the signal passes through an AVR, set it to pass-through mode so it does no video processing. Then, select an HD channel that broadcasts at a resolution other than your display's (that is, if your display is 1080p, select ABC, Fox, or ESPN; if it's 720p, select CBS, NBC, or another 1080i channel) and watch for a few minutes. If your cable/satellite box includes a DVR, record a few minutes of material so you can watch the same content in the next two tests.

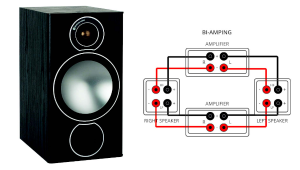

Next, set the cable/satellite box to output the native resolution of whatever channel it's tuned to, which prevents it from doing any scaling. Set the AVR to output the same resolution as your display, which means it will do the scaling, and watch the same channel or, even better, the same recorded content as before. If you have a 1080p display, also watch a 1080i channel in this configuration to see how well the AVR deinterlaces 1080i.

Finally, set the cable/satellite box to output each channel's native resolution and the AVR to pass-through mode and watch the same two channels or clips as before. In this configuration, the TV is doing the scaling and, if necessary, the deinterlacing.

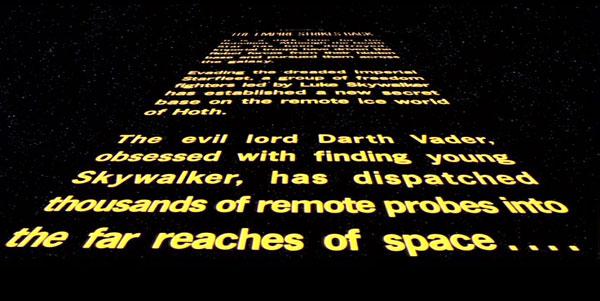

Of the three configurations, which one exhibits the fewest scaling artifacts? These artifacts can include softness and jaggies along well-defined diagonal edges. What about deinterlacing artifacts, such as jaggies and moiré distortion in areas of fine detail? Scrolling text, such as the opening crawl of the Star Wars movies, will look jittery and jagged if the scaling or deinterlacing is poor. Whichever configuration works best is the one you should use to watch TV.

If you have an A/V question, please send it to askhometheater@gmail.com.