What HDR Means for Color and Luminance

In our pre-HDR lives we were limited to home video program sources that were digitally graded (produced) for a peak white level (luminance) of around 100 nits, or about 29.2 fL. While the camera images captured in the production of a source such as a motion picture were and are capable of far higher peak levels (on either film or video), up to now we’ve been tied to 100 nits thanks to standards established decades ago around the limitations of motion picture projectors and CRT televisions.
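The nits and foot-lambert figures used throughout this piece are simple unit conversions. One foot-lambert is 1/π candela per square foot, which works out to about 3.426 cd/m² (nits). Here is a minimal Python sketch of the conversion. The helper names are our own, chosen for illustration:

```python
import math

# One foot-lambert (fL) is 1/pi candela per square foot,
# and one square foot is exactly 0.09290304 square meters.
NITS_PER_FOOT_LAMBERT = 1.0 / (math.pi * 0.09290304)  # about 3.426 cd/m^2


def nits_to_fl(nits: float) -> float:
    """Convert luminance in nits (cd/m^2) to foot-lamberts."""
    return nits / NITS_PER_FOOT_LAMBERT


def fl_to_nits(fl: float) -> float:
    """Convert luminance in foot-lamberts to nits (cd/m^2)."""
    return fl * NITS_PER_FOOT_LAMBERT


if __name__ == "__main__":
    # Peak levels that come up in this article, in both units.
    for nits in (100, 500, 1000, 4000):
        print(f"{nits:5d} nits = {nits_to_fl(nits):7.1f} fL")
    for fl in (14, 30, 35):
        print(f"{fl:5d} fL   = {fl_to_nits(fl):7.1f} nits")
```

Running it confirms that 100 nits comes out at about 29.2 fL. The 30-35 fL calibration targets correspond to roughly 103-120 nits.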

Ironically, until recently even the best commercial cinemas were lucky to reach 14 fL. And although home video displays, particularly flat screen sets, have long been capable of more than 100 nits peak output, most professional home calibrators, because of the way the sources were produced, have aimed for reference levels of 30-35 fL for dim- or dark-room viewing (with bright-room settings possibly saved in another picture memory). Any difference between the presentation luminance and the grading luminance could result in something different from what the colorist (and perhaps the filmmaker) saw in the mastering room. But unless the home viewer cranked the contrast and backlight levels to stun, or the theater manager insisted on using the projection lamp well beyond its sell-by date (which happened more than you might expect; theatrical projection lamps were and are extremely expensive), the alterations produced in the image by differences in production and playback luminance levels were too small to matter much to the viewer.

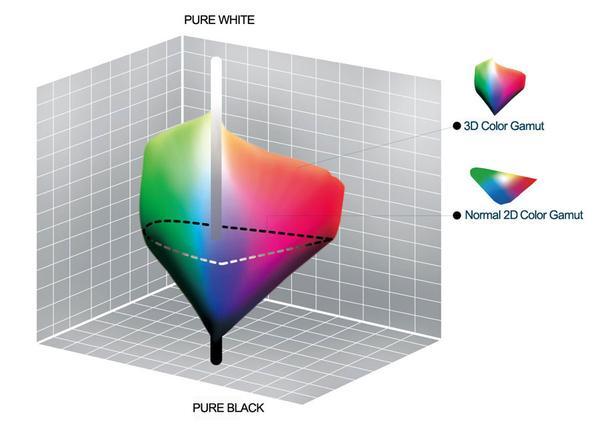

While high dynamic range (HDR) involves far more than just higher peak luminance levels, the above discussion changes significantly when we’re talking about peak luminance values 10 times higher than we’ve become accustomed to at home. Projectors aren’t yet capable of such brightness increases (even Dolby Cinema, which offers a limited version of high dynamic range, tops out at around 30 fL, or about 100 nits). But flat screen HDR sets can now reach between 500 and 1000 nits, depending on the technology (LCDs can reach the top of this range; OLEDs are generally limited to around 500 nits). Because of this, we’re now starting to see the concept of a 2D color gamut replaced by a 3D “color volume.”

The conventional way we represent a color gamut, the CIE chart, is a two-dimensional representation of what is actually a three-dimensional space: the color volume. An example of the latter is shown at the top of this blog, with the slice shown as a dotted line representing the conventional CIE chart. The third dimension here is luminance. Note that as the luminance gets brighter, the colors eventually coalesce to white; as they get dimmer, they fade into black. This is consistent with the way the human visual system works, and both the CIE chart and the color volume are based on the characteristics of human vision. We’ll never know what ET saw when he first saw television, but it’s unlikely to have been what we see.
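The CIE chart plots chromaticity coordinates (x, y). The luminance it leaves out is the third coordinate, Y, and together the three form the CIE xyY space. The standard conversion from xyY to CIE XYZ shows concretely that the chart is a projection. The same (x, y) point at different Y values is the same chromaticity at different brightness, and projecting back onto the chart discards Y entirely. This is a minimal Python sketch, and the function names are our own:

```python
from __future__ import annotations


def xyy_to_xyz(x: float, y: float, big_y: float) -> tuple[float, float, float]:
    """Convert CIE xyY (chromaticity plus luminance Y) to CIE XYZ."""
    if y == 0:
        # Chromaticity is degenerate at y = 0; treat it as black.
        return (0.0, 0.0, 0.0)
    big_x = x * big_y / y
    big_z = (1.0 - x - y) * big_y / y
    return (big_x, big_y, big_z)


def xyz_to_xy(big_x: float, big_y: float, big_z: float) -> tuple[float, float] | None:
    """Project XYZ onto the 2D chromaticity chart, throwing away luminance."""
    total = big_x + big_y + big_z
    if total == 0:
        return None  # black has no defined chromaticity
    return (big_x / total, big_y / total)


if __name__ == "__main__":
    d65 = (0.3127, 0.3290)  # the D65 white point
    for nits in (100.0, 1000.0):
        xyz = xyy_to_xyz(*d65, nits)
        xy = xyz_to_xy(*xyz)
        print(f"Y={nits:6.0f}  XYZ={[round(v, 1) for v in xyz]}  "
              f"xy=({xy[0]:.4f}, {xy[1]:.4f})")
```

Both luminance levels land on the same chart coordinates, which is exactly the information a 2D gamut plot cannot show.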

The actual color volume available, however, will vary with the display. The volume we’ll likely be dealing with for the next few years is called P3; it is the same gamut (though with a different white point) as the one used in digital cinema. You’ll likely be hearing chatter (if you haven’t already) about another, even wider color gamut, BT.2020. But no currently available consumer displays can go beyond P3 (and some can’t yet even make it that far).
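One rough way to compare these gamuts is to measure the area of each primary triangle on the CIE 1931 xy chart. The primaries below are the published BT.709 (HD), P3, and BT.2020 values. Area on the xy chart is not perceptually uniform, so treat the ratios as a coarse comparison only. This is a minimal Python sketch using the shoelace formula:

```python
# CIE 1931 xy chromaticities of the red, green, and blue primaries.
# P3 variants share these primaries but differ in white point:
# DCI (cinema) white is about (0.314, 0.351), while D65 is (0.3127, 0.3290).
PRIMARIES = {
    "BT.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "BT.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(pts):
    """Area of a triangle from its three (x, y) vertices (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = pts
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


if __name__ == "__main__":
    base = triangle_area(PRIMARIES["BT.709"])
    for name, pts in PRIMARIES.items():
        area = triangle_area(pts)
        print(f"{name:8s} area={area:.4f}  ({area / base:.2f}x BT.709)")
```

By this crude measure P3 covers about 1.36 times the xy area of BT.709, and BT.2020 about 1.89 times.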

When an HDR UHD set receives an HDR UHD source, metadata attached to the source file tells the set the range of color (and peak luminance) at which the source was mastered. The set then uses this metadata to “tone map” the source to fit its (likely more limited) capabilities.
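As a rough illustration of the decision the set has to make, here is a minimal Python sketch. The `MasteringMetadata` and `DisplayCaps` records are hypothetical. They are loosely modeled on the mastering-display information that HDR10 sources carry (primaries and peak luminance), but real formats and sets differ in detail. The sketch asks two questions. Was the source mastered brighter than the set can go? Does any mastering primary fall outside the set's own gamut triangle?

```python
from __future__ import annotations

from dataclasses import dataclass

Point = tuple[float, float]  # CIE 1931 (x, y) chromaticity


@dataclass
class MasteringMetadata:
    """Hypothetical summary of the metadata describing the mastering display."""
    primaries: list[Point]  # R, G, B chromaticities
    peak_nits: float


@dataclass
class DisplayCaps:
    """Hypothetical summary of what the consumer set can reproduce."""
    primaries: list[Point]
    peak_nits: float


def _cross(o: Point, a: Point, b: Point) -> float:
    """z component of (a - o) x (b - o); its sign tells which side of o->a b is on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def inside_triangle(p: Point, tri: list[Point]) -> bool:
    """True if p lies inside the triangle tri or on one of its edges."""
    d1 = _cross(tri[0], tri[1], p)
    d2 = _cross(tri[1], tri[2], p)
    d3 = _cross(tri[2], tri[0], p)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)


def mapping_needed(meta: MasteringMetadata, disp: DisplayCaps) -> dict[str, bool]:
    """Decide whether luminance (tone) mapping and/or gamut mapping is required."""
    return {
        # The source was mastered brighter than the set can reproduce.
        "tone_map": meta.peak_nits > disp.peak_nits,
        # Gamut triangles are convex, so the source gamut fits only if
        # all three of its primaries lie within the display's triangle.
        "gamut_map": not all(inside_triangle(p, disp.primaries) for p in meta.primaries),
    }


if __name__ == "__main__":
    p3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]
    bt709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
    source = MasteringMetadata(primaries=p3, peak_nits=4000.0)
    print(mapping_needed(source, DisplayCaps(primaries=p3, peak_nits=1000.0)))
    print(mapping_needed(source, DisplayCaps(primaries=bt709, peak_nits=1000.0)))
```

The hard part, and the part where sets can differ widely, is what happens after these flags come back true.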

But there are potential pitfalls here, and both the source and the set must do their jobs correctly for this mapping to work. The set might be designed to be creative with the way it handles the metadata, perhaps by tweaking its response in a way that looks “better” to the manufacturer, or that (the maker believes) will pop on the showroom floor and therefore sell better (of course we know manufacturers have never done this in the past!). The result could be very different from what the producer of the source intended. We’ve already seen HDR UHD images in manufacturers’ presentations that look more like cartoons than real life. Calibration might help with this, though at present the tools and methodology needed for a proper HDR calibration remain very much a work in progress.

In addition to color, the set’s tone mapping must accommodate the fact that the sources will often be graded for a higher peak luminance than the set can reproduce. The best pro monitors can produce up to 4000 nits, but consumer displays can’t as yet get nearly that bright. The source metadata, working with the set, must compensate for this.
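On the luminance side, one simple way a set could fit a 4000-nit master into a 1000-nit panel works in two parts. Everything below a "knee" passes through unchanged. Highlights above the knee roll off so that the mastering peak lands exactly at the display's peak. This curve is a hypothetical illustration chosen for simplicity. It is not any manufacturer's algorithm or a standardized method, and the `knee_fraction` default is an arbitrary assumption:

```python
def tone_map(nits: float, master_peak: float, display_peak: float,
             knee_fraction: float = 0.75) -> float:
    """Hypothetical highlight roll-off from a mastering peak to a display peak.

    Below the knee, luminance passes through unchanged. Above it, the rest of
    the mastering range is squeezed into the display's remaining headroom with
    a smooth curve that starts at slope 1 (no kink at the knee) and ends
    exactly at display_peak.
    """
    if not 0.0 < knee_fraction < 1.0:
        raise ValueError("knee_fraction must be between 0 and 1")
    if master_peak <= display_peak:
        # Nothing to compress; just stay within what the panel can do.
        return min(nits, display_peak)
    knee = knee_fraction * display_peak
    if nits <= knee:
        return nits
    nits = min(nits, master_peak)
    x = (nits - knee) / (master_peak - knee)          # 0 at the knee, 1 at master_peak
    r = (master_peak - knee) / (display_peak - knee)  # compression ratio, always > 1 here
    a = 1.0 / (r - 1.0)                               # makes the slope at the knee equal 1
    return knee + (display_peak - knee) * x * (1.0 + a) / (x + a)


if __name__ == "__main__":
    for nits in (100, 500, 750, 1000, 2000, 4000):
        print(f"{nits:5d} nits mastered -> {tone_map(nits, 4000, 1000):6.1f} nits displayed")
```

With these assumptions everything up to 750 nits is untouched. A 1000-nit highlight lands at 880 nits, and the 4000-nit peak lands at exactly 1000. Where the knee sits is a trade-off: a lower knee leaves more room to keep bright highlights distinct, but it alters more of the picture.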

And at the other end of the chain, film studios have Lottabytes of computer files storing the digital intermediates (DIs) of films that were mastered at less than true 4K resolution. So far, these are generally being transferred to 4K by simple upconversion. There’s been a lot of flak on various Internet forums about this, but so far it’s the least of our concerns, since the advantage of 4K over 2K is hard to spot on consumer-sized displays.
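"Simple upconversion" covers everything from pixel replication to sophisticated filtering. The crudest form, nearest-neighbor doubling, shows why no upconversion can add detail that was never captured. Every new pixel is derived from pixels already in the 2K file, so a 2048 × 1080 frame becomes 4096 × 2160 without gaining any information. Here is a minimal Python sketch on a tiny grayscale image stored as a list of rows. Real scalers use far better interpolation filters than this:

```python
def upscale_2x_nearest(image):
    """Double width and height by repeating each pixel into a 2x2 block."""
    out = []
    for row in image:
        wide = [p for p in row for _ in range(2)]  # repeat each pixel horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat the row vertically
    return out


def downscale_2x_sample(image):
    """Keep every other pixel in each direction (undoes the doubling above)."""
    return [row[::2] for row in image[::2]]


if __name__ == "__main__":
    src = [[10, 20, 30],
           [40, 50, 60]]
    up = upscale_2x_nearest(src)
    for row in up:
        print(row)
    print(downscale_2x_sample(up) == src)
```

The round trip recovers the original exactly, which is another way of saying the "4K" version contains nothing the 2K version didn't.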

But what about luminance? Those archived studio files were likely graded for 100 nits, or standard dynamic range. When Joe Bigshot, the studio’s head of home video, requests an HDR UHD Blu-ray release of Star Wars: The Wrath of Kermit, do you think they’re going to go back to the original elements (assuming that they still exist) and work up a whole new DI graded at 1000 nits? Or are they going to take the 100 nit DI and upconvert it to “HDR” at 1000 nits peak luminance? And yes, there’s now technology available to do just that. While we might prefer that the transfer be redone at 1000 nits from scratch, that may only happen with a few prime catalog titles, perhaps those also overdue for a full remastering and re-release.
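The commercial SDR-to-HDR tools mentioned above are proprietary, and nothing here describes how they work. Purely as a hypothetical illustration of the general idea (often called inverse tone mapping), the sketch below leaves the shadows and midtones of a 100-nit grade alone. It stretches only the top of the range toward a 1000-nit peak. The threshold and exponent are arbitrary assumptions:

```python
def sdr_to_hdr(nits: float, sdr_peak: float = 100.0, hdr_peak: float = 1000.0,
               threshold: float = 50.0, gamma: float = 2.0) -> float:
    """Hypothetical highlight expansion of an SDR grade toward an HDR peak."""
    if not 0.0 <= threshold < sdr_peak <= hdr_peak:
        raise ValueError("expected 0 <= threshold < sdr_peak <= hdr_peak")
    nits = max(0.0, min(nits, sdr_peak))
    if nits <= threshold:
        return nits  # shadows and midtones keep their original grade
    x = (nits - threshold) / (sdr_peak - threshold)  # 0 at threshold, 1 at SDR peak
    # Add extra luminance that grows with x**gamma, so most of the boost
    # goes to the brightest highlights; SDR peak white maps to hdr_peak.
    return nits + (hdr_peak - sdr_peak) * x ** gamma


if __name__ == "__main__":
    for level in (10, 50, 75, 90, 100):
        print(f"{level:5.0f} nits SDR -> {sdr_to_hdr(level):7.1f} nits HDR")
```

Note what such a mapping cannot do. Highlights that were clipped at 100 nits in the original grade all land at the same 1000-nit value, so no detail reappears in them. That is one reason a fresh grade from the original elements can look better.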

While this HDR upconversion of an SDR DI, combined with its 2K-to-4K upconversion, might well send videophiles out into the streets with torches and pitchforks (shades of 3D upconversions, or high-res audio releases generated by upconverting CD-quality files), this process won’t necessarily be the end of civilization as we know it. The result, as always, will depend on the caliber of the original file and how well the colorist does his or her job in performing the conversions. New titles, graded from the get-go at 4K and 1000 nits, should be immune to both of these issues. But as in the early days of any new format, we’ll likely see releases that look worse than their Blu-ray counterparts, in spite of 4K and HDR. But we’ll also see many outstanding ones.