TV Tech Explained: Mind Your Gamma

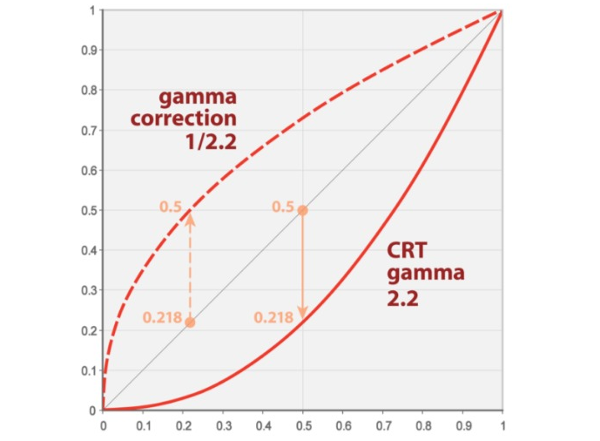

In an ideal world the brightness of the image on a video display would be directly proportional to the input signal. That is, if you plot a graph with the percent of total luminance in the signal on the bottom (x-axis) against the luminance actually achieved by the set (y-axis), it would be a straight diagonal line: 20% of the signal produces 20% of the total luminance, 40% of the signal produces 40% of the total luminance, and so forth. Simple, no?

Well, it's not exactly that simple. The CRT (cathode ray tube) technology available when television was first developed was anything but linear. It was very insensitive at the dark end of the range and more sensitive at higher levels. To get a linear response, an inverse correction was performed on the source material. As shown in the opening figure, the goal was the straight thin line in the middle. We refer to this as gamma, but the more accurate term, at the display side, is gamma correction. Notice from the graph that gamma affects the broad middle of the brightness range. That is, it doesn't alter either the full black or peak white level of the image. Or at least if done properly it shouldn't!
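To make the inverse correction concrete, here is a minimal Python sketch. It assumes the display behaves as a pure power law with an exponent of 2.4, which is an illustrative value rather than a measurement of any particular CRT, and the function names `encode` and `display` are made up for this example.

```python
# Illustrative only: models the display as a pure power law.
DISPLAY_GAMMA = 2.4  # assumed exponent for this sketch


def encode(scene_light: float, gamma: float = DISPLAY_GAMMA) -> float:
    """Apply the inverse correction to linear light (0.0 to 1.0) at the source."""
    return scene_light ** (1.0 / gamma)


def display(signal: float, gamma: float = DISPLAY_GAMMA) -> float:
    """Model the display's nonlinear response to a signal (0.0 to 1.0)."""
    return signal ** gamma


if __name__ == "__main__":
    # The two curves cancel, so the end-to-end response is the straight line.
    for level in (0.0, 0.2, 0.4, 0.6, 0.8, 1.0):
        signal = encode(level)
        print(f"scene {level:.1f} -> signal {signal:.3f} -> screen {display(signal):.3f}")
```

Note that 0.0 and 1.0 pass through both functions unchanged, which is why a properly applied gamma leaves full black and peak white alone.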

So why, with today's solid-state technology, does this odd correction persist when it isn't really needed? Simple: over 75 years of content produced with that gamma, not to mention hundreds of millions of dollars in studio and consumer gear designed to work with it.

Gamma in conventional SD and HD sources has been typically referred to as a number, such as 1.8, 2.0, 2.2, and 2.4. Where these numbers come from requires a mathematical depth we don't need to dive into here. But basically the higher the number the darker the perceived image.
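For readers who do want a quick look at the math, the gamma number is commonly treated as the exponent in a simple power law: relative light output equals the signal level raised to the power of gamma. The Python sketch below assumes that idealized model (real displays deviate from it), and the function name `relative_luminance` is hypothetical.

```python
def relative_luminance(signal: float, gamma: float) -> float:
    """Idealized power-law display: signal and result are both in the 0.0 to 1.0 range."""
    return signal ** gamma


if __name__ == "__main__":
    # Feed the same 50% signal to displays with different gamma settings.
    for gamma in (1.8, 2.0, 2.2, 2.4):
        percent = relative_luminance(0.5, gamma) * 100
        print(f"gamma {gamma}: a 50% signal yields {percent:.1f}% of peak luminance")
```

Under this model a 50% signal lands at roughly 29% of peak luminance with a gamma of 1.8 and roughly 19% with a gamma of 2.4, which is why the higher number looks darker through the mid-tones.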

Until recently there was no fully agreed-upon level for gamma, nor did gamma specify the actual peak luminance required of the image. The content producers and the public were free to choose, assuming that the TV offered a gamma control. Typically, however, the most appropriate values were 2.2 or 2.4, depending on the program material and the viewing environment (a lower gamma number typically works best in a brightly lit room). But some TV makers go their own way when labeling their gamma control. Sony, for example, offers gamma options numbered from Minimum (-3) to Maximum (+3).

But in 2011 the industry adopted a new gamma standard: BT.1886. It's similar to a gamma of 2.4, but offers better performance at the darkest end of the brightness range. Its adoption is growing, but it's not offered as a specific selection on most HDTVs. Where it isn't available, I generally recommend a setting of 2.2 or 2.4 (I'd love to see an additional option of 2.3 instead of the almost useless 1.8 often provided; yes, I'm that fussy).
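The BT.1886 reference function is compact enough to sketch in a few lines of Python. The formula follows the published recommendation, but the default white and black levels below (100 and 0.1 cd/m²) are assumptions chosen for illustration, and the function name `bt1886_eotf` is hypothetical.

```python
def bt1886_eotf(signal: float, white: float = 100.0, black: float = 0.1) -> float:
    """Return screen luminance in cd/m^2 for a normalized signal (0.0 to 1.0).

    white and black are the display's measured peak and black luminance;
    the defaults here are illustrative assumptions, not recommendations.
    """
    gamma = 2.4
    lw = white ** (1.0 / gamma)
    lb = black ** (1.0 / gamma)
    a = (lw - lb) ** gamma   # gain term
    b = lb / (lw - lb)       # black-level lift term
    return a * max(signal + b, 0.0) ** gamma


if __name__ == "__main__":
    for signal in (0.0, 0.05, 0.1, 0.5, 1.0):
        print(f"signal {signal:.2f} -> {bt1886_eotf(signal):.3f} cd/m^2")
```

With a black level of zero the function collapses to a plain 2.4 power law. With a nonzero black level, the curve is anchored at the display's actual black, which is where the difference at the darkest end of the range comes from.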

The three photos shown below were created using photo editing software to adjust the overall brightness, not just the gamma, but they convey the concept.

But in 2011 the industry was also contemplating the next big step forward for the video marketplace, hopefully one with more staying power than 3D. That step, of course, was high dynamic range (HDR), which was likely already in heavy development at the time in the inner bowels of the industry's research labs.

The move to HDR appeared to be a good time for a break in the decades-long way of doing gamma, at least for HDR sources. The powers that be settled on the term EOTF, for Electro-Optical Transfer Function, to describe the relation between a source's electrical footprint and the visible and measurable optical image that results from it. A more intelligible term, to be sure, but confusing nonetheless. The term EOTF can actually be used for either SDR or HDR, but it's referenced more often for HDR. For SDR, the word gamma persists. Tradition (cue Fiddler on the Roof).

The HDR format was developed with a peak brightness goal of 10,000 nits. No consumer display can come even close to that. No professional monitor can do it either. Most Ultra HD HDR program material is mastered at lower levels, typically 1000 nits or 4000 nits. But to even play that without visible white clipping requires some clever processing tricks and a new term: Perceptual Quantization. But that's a subject for next time.