Why A/B Testing Is Harder Than You Think

Take speakers, for example. Simply equalizing their sound levels is touchy. Most speakers aren’t flat, so even if you check their outputs with a sound level meter, a single reading can’t fully account for their differences in frequency response. In those Stereophile tests we used so-called B-weighted pink noise for level matching. I was never entirely convinced that this was the ultimate answer, but it was the best one we had at the time, and perhaps still is.
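The arithmetic behind level matching can be sketched in a few lines of Python. This is only an illustration: the meter readings and the names `trim_db` and `db_to_voltage_gain` are hypothetical, and a single broadband reading still says nothing about how two speakers differ across the frequency range.

```python
def trim_db(reference_spl_db, measured_spl_db):
    """Gain change (in dB) needed to bring a speaker up or down to the reference level."""
    return reference_spl_db - measured_spl_db


def db_to_voltage_gain(db):
    """Convert a level change in dB to a linear voltage (amplitude) ratio."""
    return 10 ** (db / 20)


# Hypothetical meter readings with the same pink-noise signal and volume setting.
speaker_a_spl = 80.0  # dB SPL, treated as the reference
speaker_b_spl = 78.5  # dB SPL

trim = trim_db(speaker_a_spl, speaker_b_spl)
gain = db_to_voltage_gain(trim)
print(f"Raise speaker B by {trim:.1f} dB (voltage gain x{gain:.3f})")
```

A positive trim means the second speaker needs more drive to reach the reference; a negative trim means it needs less.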

In those shootouts we didn’t attempt to A/B the speakers side-by-side. The presence of one speaker directly adjacent to another can affect their dispersion patterns, and therefore arguably their sound. And apart from that they’d also be in slightly different locations. So we typically auditioned one stereo set of speakers at a time. Each pair was physically moved out of the room after its turn in the hot seat. The disadvantage to that approach was audio memory; it took at least five minutes to make each switch. We did listen blind, placing acoustically transparent grille cloth in front of each speaker so they couldn’t be visually identified. But the tests were single blind, not double blind; that is, the person running the tests (me) knew the identity of each speaker as it played, but the participants did not.

Other variables involved the selection of source material, the acoustics of the room (the same for each speaker, of course, but that still risked the room’s character unavoidably favoring some candidates over others), the height of each listener’s ears relative to the optimum acoustic height for each speaker, the playback levels (which, like the source material, will never satisfy all listeners), and the effect of the acoustically transparent screen hiding the speakers (no such scrim is fully transparent acoustically, but the speakers’ own grilles were always removed to avoid a double layer of cloth).

It was a wonder that we arrived at what I believe were reasonable results, though with a number of reviewers/listeners involved the conclusions were always hard to quantify. While we did two or three such tests, we didn’t continue them. They were both difficult and expensive to run; we had to fly in four or five reviewers. And the varied seating positions of the listeners for these two-channel speaker tests inevitably affected the results. In our last such test we avoided that variable by running individual sessions for each participant. This was better, but dramatically slowed down the test.

The Video A/B

On the video side, the best-known shootouts today are those conducted annually by Value Electronics in the New York area. I’ve never participated in one of those, so nothing I say here should be assumed to apply to them. But I’m sure that the people who set up and run these tests are quite familiar with the difficulties involved.

Video comparisons are slightly less complex than speaker shootouts. But while I’ve set up a number of these shootouts involving several sets in a fully darkened room, and masked them sufficiently to hide their identities, it wasn’t always possible to completely conceal what they were. In one such test some years back, in which I was a participant, I could at least categorize all the entries as either a plasma or LCD by their off-axis performance. Plasmas invariably won such shootouts when plasmas were still king.

Beware of group tests in which each model under evaluation is set to its factory Standard Picture Mode. Comparisons run by manufacturers are often conducted this way, and for good reason. If a manufacturer adjusts the controls of their competition in any way they could be accused of skewing the test. But Standard mode is misnamed; it doesn’t follow any established standard, since there’s no such thing for preset Picture Modes. Rather it’s each manufacturer’s idea of which assortment of settings will please the most people most of the time. And that’s rarely an accurate setup! Standard modes, for that reason, will invariably look very different from manufacturer to manufacturer, and sometimes even from set to set within a single manufacturer’s product line. An independent comparison should always employ an experienced calibrator to adjust each set for optimum, accurate performance.

Today my comparisons of flat screen sets aren’t blind, are more informal, and usually involve me flying solo. But I know what to look for, and what setup parameters are needed to ensure a fair comparison. When I conduct an A/B for one of my own reviews (assuming suitable challengers for the set under review are at hand) the color calibration settings are generally left as they were in the non-A/B viewing sessions. Using test patterns I then adjust the two sets for as close a match as possible in their peak white and black levels. If the match isn’t completely successful, I might make some minor tweaks to one or both sets, but only rarely more than one or two steps on the relevant controls.

The point in trying to match the look of the two sets is to make any inherent differences between them easier to spot. It's often been my experience, for example, that one click up or down from the optimum, test-pattern-derived setting on the Brightness control can significantly enhance or degrade a set's black and shadow detail performance on real world source material. I’m more critical of grayed-out blacks than subtle black crush, so I tend to favor the latter if the change is only one step down on Brightness and the effect on normal program material is otherwise benign. At that point it’s a judgment call. And if I offer one set that benefit, I dial in a similar change in the other set as well, again assuming the result isn’t negative.

Aligning the peak whites is also critical, and for that I use a precision light meter. If, for example, you’re comparing an OLED display to an LCD (or a plasma, back in the day!), the peak white setting should be checked on a source (such as a window test pattern) offering a bright, but not screen-filling, white area. LCDs can go far brighter than OLEDs, so you must keep that in mind (and I try to point it out in my report if it’s obvious on normal program material). The gamma setting (particularly for standard dynamic range material) might also need tweaking to match the mid-tone brightness levels of the two sets. But this can never be perfect, as the gamma curves offered in two different sets are rarely identical.
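To see why matched peak whites don't guarantee matched mid-tones, here is a minimal Python sketch. It assumes an idealized power-law display (luminance proportional to the signal level raised to the gamma value), which real sets only approximate. The 150-nit peak, the two gamma values, and the function name `luminance` are illustrative assumptions, not measurements from any actual set.

```python
def luminance(signal, peak_nits, gamma, black_nits=0.0):
    """Idealized power-law display model: signal level (0..1) to luminance in nits."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be between 0 and 1")
    return black_nits + (peak_nits - black_nits) * signal ** gamma


PEAK_NITS = 150.0  # assumed: both sets already matched at peak white

# Same 50% stimulus, two hypothetical gamma settings.
set_a_mid = luminance(0.5, PEAK_NITS, gamma=2.2)
set_b_mid = luminance(0.5, PEAK_NITS, gamma=2.4)

print(f"Set A mid-tone: {set_a_mid:.1f} nits")
print(f"Set B mid-tone: {set_b_mid:.1f} nits")
print(f"Set B is {100 * (1 - set_b_mid / set_a_mid):.0f}% dimmer at mid-gray")
```

Under this model both sets reach the same 150 nits at full white, yet the higher-gamma set renders mid-gray noticeably darker, which is the kind of gap a gamma tweak tries to close.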

Parallels in Audio and Video A/B Testing

The parallels here with a loudspeaker comparison are striking. A loudspeaker’s maximum loudness level corresponds to the peak output of a display. And much as frequency response deviations make it tricky to match the subjective levels of two speakers under test, differences in gamma make it tricky to match the subjective brightness of two displays.

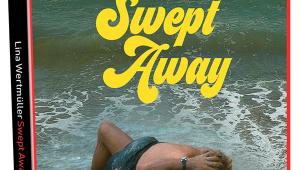

You don't have to be an eagle-eyed viewer to spot color differences in the two sets shown side-by-side in the above photo. In reality, however, the differences ranged from ignorable to invisible. Why the difference? I can only speculate that the color in the right set interacted oddly with the pixels in the camera used to take the shot. Not a good sales pitch for screen shots!