Doesn't Dolby Vision require a 10-bit panel to work its magic? If so, I would think that the Vizio, LG, and Sony displays that employ Dolby Vision could reasonably be assumed to be 10-bit.

Can My TV Display 10-Bit Color?

Q How can I tell if a TV has a 10-bit panel to process the full 10-bit color of high dynamic range (HDR) video? I’ve heard that certain TVs accept 10-bit signals, but display them at 8-bit resolution. I’m interested in buying Samsung’s KS9810 SUHD TV, a top-of-the-line model from 2016, but want to make sure it has a 10-bit display. How will I be able to tell the difference?—Jerry Peterson

A The bit depth of a TV’s display panel is a spec you need to rely on the manufacturer to provide. Unfortunately, that information is not always available on a company’s website. While many new HDR-compatible TVs, especially higher-end models, are 10-bit, some use 8-bit displays. Still others may process video at 8 bits and then use “dithering” to simulate the smooth appearance of 10-bit video shown on a 10-bit display.
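To give a feel for how dithering can fake extra bit depth, here is a minimal Python sketch. It assumes simple random-noise dithering; the processing inside any given TV is proprietary and likely more sophisticated, and the function names below are hypothetical, chosen only for illustration.

```python
import random

def truncate_to_8bit(v10: int) -> int:
    """Drop the two least significant bits of a 10-bit level (0..1023)."""
    return v10 >> 2

def dither_to_8bit(v10: int, rng: random.Random) -> int:
    """Add a random offset of 0..3 before dropping the low bits (illustrative only)."""
    return min(255, (v10 + rng.randrange(4)) >> 2)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
v10 = 513               # a 10-bit level that falls between 8-bit levels 128 and 129

# Plain truncation always lands on the same 8-bit level, which causes banding.
truncated = truncate_to_8bit(v10)

# Dithering mixes levels 128 and 129 so that their average approximates 513 / 4.
samples = [dither_to_8bit(v10, rng) for _ in range(10000)]
average_10bit = 4 * sum(samples) / len(samples)

print(truncated)       # 128
print(average_10bit)   # close to 513
```

Across many neighboring pixels (or successive frames), the eye averages the mixed levels, which is why a dithered 8-bit panel can look nearly as smooth as a true 10-bit one.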

What’s important to keep in mind is that a 10-bit display is only a benefit when viewing HDR content such as movies on 4K Ultra HD Blu-ray. That’s because HDR video is stored with 10-bit color depth, where 10 bits are used to encode each of the red, green, and blue color components for every pixel in the image. This results in a range of 1.07 billion possible colors as opposed to the 16.8 million colors delivered by the 8-bit encoding used for regular HDTV. What are the visible benefits of 10-bit color? The key one is the elimination of color “banding” artifacts: images have a smoother look that’s evident in shots with flat areas of color, such as an open sky or blank wall. The same images viewed on an 8-bit display will likely show coarser color transitions.
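The color counts above follow from simple arithmetic: each channel has 2 raised to the bit depth possible levels, and the three channels multiply together. A short Python sketch (the function name is hypothetical) confirms the figures:

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for a given bit depth per color channel."""
    levels = 2 ** bits_per_channel   # 256 levels at 8-bit, 1,024 at 10-bit
    return levels ** channels        # red x green x blue combinations

print(color_count(8))    # 16777216   (about 16.8 million)
print(color_count(10))   # 1073741824 (about 1.07 billion)
```

The jump from 256 to 1,024 levels per channel means each step between adjacent shades is a quarter the size, which is what smooths out gradients.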

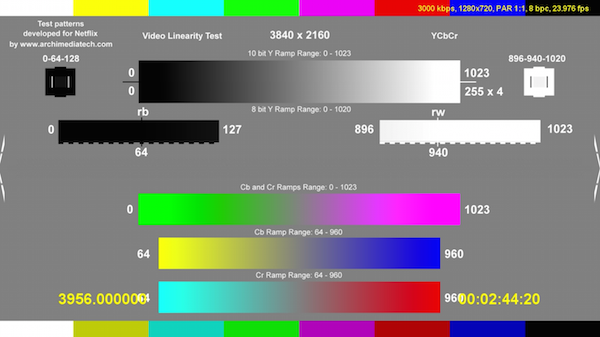

The good news for you is that Samsung lists the KS9810 as having “10-bit support,” so you’ll presumably be safe when buying that model. How will you be able to tell the difference? Stream this test from Netflix. (You can call it up by searching for “test patterns” in the Netflix app.) If you see banding in the area of the grayscale strip designated as 10-bit, then the set has an 8-bit display. If it looks smooth, then the display is most likely 10-bit. I say “most likely” because it isn’t a foolproof test: the set may still be applying dithering to create the smooth effect of true 10-bit color.


didn't know some tv sets might use dithering to make 8-bit look like 10-bit. now i know.