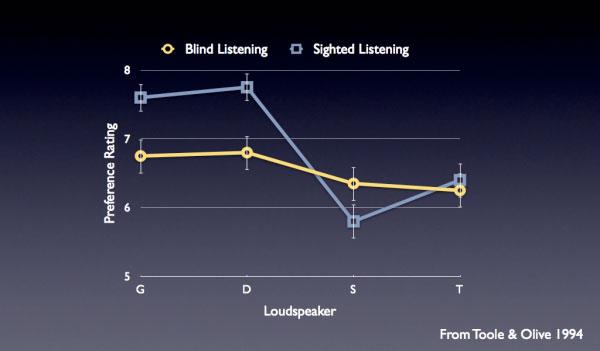

The conclusion I draw from that graph is that there were 4 speakers that were sonically similar. When there was less information to bias the test subjects (they couldn't see them) they were rated similarly. When the subjects were able to see them, they let visual cues change their perception of how they "should" sound. In this case Mr. Guttenberg is drawing an inaccurate conclusion.


Are Blind Audio Comparisons Valuable?

Also, Guttenberg maintains that the tester's ears are psychophysiologically biased by the sound of one product while listening to the next product. Finally, the conditions under which the test is conducted are rarely the same as those in any given consumer's room, so the results mean nothing in terms of deciding what to buy.

Do you agree? Are blind comparisons of audio products valuable? On what do you base your position?

Vote to see the results and leave a comment about your choice.


The test is described here:

http://seanolive.blogspot.com/2009/04/dishonesty-of-sighted-audio-produc...

"The sighted tests produced a significant increase in preference ratings for the larger, more expensive loudspeakers G and D."

What I mean by letting visual cues alter one's perception is that one will expect to hear certain things based upon what they see. For example I'd expect a speaker with three 6.5 inch woofers to have more authoritative bass than a stand mount monitor with a single 6.5 inch driver. This may or may not be the case, however I'm quite sure it is how I would perceive it.

They can be valuable as a tool to educate yourself, but, let's face it, you will be looking at them every day, so your biases will be present every time you hear them. There are a lot of factors in what you "see": physical size, fit, finish, the name on the badge, driver types, driver array, the credit card bill from your purchase, etc.

I found Steve's remarks about blind testing in the podcast to be bizarre. Maybe that's why you're posting this here, Scott?

I had to go back and listen again. He says: "If you really believe in blind tests, your job as a consumer is pretty easy, because you can buy pretty much any piece of crap and you'll be perfectly happy, because in a blind test you wouldn't be able to tell the difference between that and something better." He seems to be saying that in blind tests all the differences that we hear in a sighted test disappear. Why would that be? It could be that listening to A then B colors the sound of B. It could be the stress of taking the test, or not having enough time, etc, etc. Or it could be that those differences were never there in the first place. How are we going to know?

I wish more reviewers would do blind testing. Not all the time, just occasionally. Doesn't have to be double blind; single blind is fine. When they've demonstrated that they can hear differences blind, people can have more faith in their sighted reviews. But they won't. Why? Because if they fail the blind test then their credibility is gone.

Most people would agree that there are significant differences in speakers, but many people claim that all amps sound the same, and they roll their eyes at the suggestion that cables sound different. I don't know one way or the other, but I'd like to find out. But how are we ever going to know, if all we have to go on are sighted tests?

I've always found this article interesting about speaker cables: http://www.roger-russell.com/wire/wire.htm

Frankly, I'm not a believer of the "exotic and expensive" cable theory.

Rationally, I disagree with Steve, except I'm here reading this page, on this website because I believe that doing so may further my enjoyment of hifi (or perhaps even of music, itself). If I really, really, disagreed with Steve I expect I'd be listening to some crap, probably perfectly happy with what I was listening to and oblivious to any of the minutiae that engage us all.

I dare anyone reading or commenting on this article to say otherwise.

Me: "Otherwise".

But I can't really back it up because your point is rock solid I think, if I get your meaning.

I find it fascinating that this subject is endless, in spite of the evolution of sound and its so-called technology, which purists resist so dearly.

Is Steve's argument solid, or is it that low self esteem buyers need to have the best to truly enjoy the so-called best sound? If that is the case, they are fortunate to have many options to lift them to new sonic heights. I can see Steve's point of view maybe; as a seller of high end goods, he should know this well. I don't know, I love what I have (extremely cost effective), but would love to have some very high end stuff also, so maybe not? Possibly it is the new shiny factor?

I have demo'd many systems, and I always close my eyes and it seems very different from when my eyes are open, and I can not explain why this is. I imagine working at a high end store you would get a much better feel for what you like and dislike. It also doesn't help that there is no trust in this business either.

Having listened to your podcast with Steve, Scott, I found it quite interesting and fun in a geeky way.

Typically many would think that a properly controlled double blind ABX test should be free of bias from a listener's POV. But I think some of what Mr. Guttenberg said has some merit. ABX listening tests are scientific by design, but they assume that human listening and the brain operate much like a machine. I generally thought that ABXing really helped clear out bias, but I think the way we listen and the way our brains interpret the audio cues they receive can still be biased and subjective even in an ABX test. If I may give a basis for my thoughts:

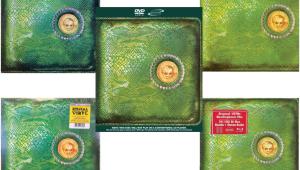

Recently, before this podcast, I was messing around with a cheap old Pioneer CD player made in the late 80's that I bought from a local thrift store. I cleaned it up and connected it to my audio system. I put in a CD from Bruce Cockburn, the "Slice O' Life" two-disc set, BTW one beautifully recorded set with superlative Cockburn lyrics and guitar playing. I played "World of Wonders", listening intently, and felt, "Meh, a plain jane sorta flat sounding Made In Japan CD player with a standard 16 bit DAC from the late 80's." After playing through most of the track, maybe 2.5 minutes of it, I stopped it. Then I put the disc in another vintage player I already had sitting in my system, an Audio Dynamics machine also from the late 80's. A machine that is rather rare and sounds pretty decent, a warmer laid back sound I'd say. Playing "World of Wonders" again I found it sounded clearly superior to the Pioneer. Being CD players with rather standard output voltages, it's easy to have volume remain steady so as not to introduce a bias. I was not surprised at this result. Next, I put the disc in my de facto standard player for CD's, my Oppo BDP-83, which is connected for CD playback via its analogue outs using the then TOTL Cirrus Logic DAC's. "World of Wonders" was immediately more open and clean, with lots of detail in the sound stage, less warm than the Audio Dynamics machine but superior to both vintage machines. It was IMO easy to hear the difference from the previous two machines. Modern DAC's and processing surely aid in this regard.

I know what you all are thinking. "Jeez man whats your point?" LOL.

I decided to repeat the tests in the same order, listening to about 2.5 min of World of Wonders. I still heard a difference but I did notice that the audible differences were not quite as wide as the first go round. Hmm, tried a third go round in order, same song, same section length in time. During the third time the audible differences between the three machines all but disappeared. I found it hard, playing one machine right after another, to hear much of a difference now. WHAT THE H*LL!?!

Then a few days later I listened to Scott's podcast with Steve. I got to thinking about the subjective and objective. Though my test was not ABX, it was volume balanced, with the same track repeated in the same order on three different machines. The first go round I could easily separate the good and bad sound between the three CD players, but by the third time it was not so easy. I figured maybe it's because, hearing the same music in a row even on three different machines, our brain tries to save effort, calories, what have you, by just using the familiarity of the previous audio cues to balance out the perceptions found. I can't figure why by the third go round the easy to hear differences became hard to hear. Maybe ABXing does similar?

We are not computers, our brains work differently and the idea that a better measuring piece of gear should sound better to us is maybe wrong. Steve made certain examples of such on Scott's show. If we enjoy the sound, fidelity etc. of a piece of gear even if it's by measure a poorer machine, it does not matter what the measure is because we find it sounds better to us regardless. I have many an LP that sounds better in my system, in my room, to my ears than the CD. The LP's sound more real, synergistic, immersive than the CD counterparts. Not all LP's sound great and not all have a real wide gap between them and the CD, but most often the LP's sound better and it does not matter what the measured specs are.

I suggest maybe a better test would be to review components on a volume balanced system (VERY IMPORTANT), it can be visually blind or not. Play no more than 1 minute of a sound track. The listener should carefully listen and then make notes as to what they hear, like and dislike. STOP the track. Cue up the other piece of gear and repeat the track only for 1 minute. The listener again should carefully listen and take new notes as to the sound and their likes, dislikes. Stop again after 1 minute. If the test needs to be repeated change to a new song or sound track and run another 1 minute maximum session repeating the note taking. IMO maybe this will reveal more appropriate human response between multiple pieces of gear and or software reviewed.

Meh, something to consider.

Anyone who has actually sold speakers can tell you that blind listening comparisons are less than worthless. It is hard enough getting some customers to hear ANY difference in some situations!

A LOT of the comparison really has to do with

1: How practiced the customer is at critical listening.

2: What biases that customer already has in his or her head

3: What music is used to demo the speakers (side rant: Why can't customers EVER bring in music they are familiar with when shopping???)

And finally the big one...4: How much value (or lack thereof) they place in a sound system

Speaker purchases are VERY personal. I have a brother who would have a hard time spending $200 on a pair of speakers, and I personally wouldn't spend LESS than $1000! Some people value bass performance and efficiency, while others prefer smooth vocals and a warm midrange. Some people want to reproduce what they hear at a club or rock concert...others are looking to reproduce every nuance of an orchestra.

I would often try to do somewhat blind tests (having customers close their eyes) to eliminate these biases, but guess what? The customers just ended up confused! Demo after demo, the customers would pick a speaker up front (usually because they came in looking for a particular brand), and their bias held through the blind tests, and they ended up walking out with the speaker they selected before they even heard it!

This debate is as old as Hi-Fi itself...but I don't understand why. Everyone has different tastes, and everyone perceives music differently, so why be surprised that math can't model the "perfect" speaker, or that it can't reveal what the customer will like when making a purchase?

As Albert Einstein has noted...It's all relative!

From medicine, food, wine or cigars, to yes, speakers, blind tests are the most credible. A speaker's sonic performance should be entirely decoupled from visual judgement. One known bias is the reviewer's desire to feel or be perceived as credible and as an expert. No reviewer wants to face the criticism of saying the Sony speakers out-performed the Martin Logan speakers, and reviewers are subconsciously driven toward the "safe" or expected result in a comparison. Human bias and its causes vary from person to person. But it is undeniable.

I can't think of any reason a true audio face-off comparison between two speakers or speaker systems benefits from the reviewer knowing which product he is listening to. Science absolutely substantiates the viability and credibility of blind comparisons, whenever possible.

LOL I could only picture how fun of a blind test that could be.

If ABXing throws out any samples made by those who are asked to participate it would be a totally flawed and useless test.

ABXing tends to treat humans as if we are machines, but we are not. Each and every one of us has a unique set of characteristics. Too many casual consumers are casual listeners and thus do not bother to care to really listen short of the tunes just being on. But given time to train oneself you will find a more discerning listener and one where preference is not based on measurements but on personal bias, habits and sonic ideals.

Specs/measurements are fun and cool, they help give a base as to the possible quality of a design and/or product, but we do not listen to specs or measurements. We listen to music and it is as being human 100% subjective. Yes, humans have general evolutionary and genetic overlap in how we are made but our subjective ways influence our preferences more than any objective measure could.

All you've done in that test is proven that those five women's lips provide equivalent response to the men kissing them.

How, exactly, do you extrapolate to proving all women are the same?

I still stand by my suggested way to compare multiple components being reviewed/compared in succession.

1: Make sure all levels are matched to be less than 1 dB apart.

2: Gather 2 or more samples of audio, likely music and of differing varieties. Make sure that no more than 1 minute of each is played at any one time.

3: Have the listener seated properly in hopefully a rather quiet room. It could be a blind test or not as I don't think it makes too much of a difference based on my criteria for this test.

4: A person in charge begins the playback of a song sample. No talking/coaching should be done. Listener should carefully listen and quickly jot notes as reference to what they hear, like, dislike and such during this phase. Just quick 1 to 3 word jotted notes.

5: At 1 minute STOP THE MUSIC!

6: Prepare the second component to be reviewed. (again make sure levels are balanced.)

7: The person in charge begins play using the same song track. Again no talking or coaching.

8: Listener should repeat the listening and note jotting. Again stop after 1 minute.

9: If listener wishes to repeat the comparison, SWITCH TO A DIFFERENT SONG TRACK!

10: Once done compare and then discuss/debate the notes taken during each session to get an idea of what and how that listener hears each component being compared and reviewed.
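The level-matching check in step 1 can be sketched in a few lines. This is only an illustration, assuming you measure the RMS voltage at the speaker terminals for each component while it plays the same test tone; the function names and the example voltages are made up for the sketch and are not part of the procedure above.

```python
import math


def level_difference_db(v_a: float, v_b: float) -> float:
    """Level difference in dB between two RMS voltages (A relative to B)."""
    if v_a <= 0 or v_b <= 0:
        raise ValueError("RMS voltages must be positive")
    # Voltage ratios convert to decibels as 20 * log10(ratio).
    return 20.0 * math.log10(v_a / v_b)


def is_level_matched(v_a: float, v_b: float, tolerance_db: float = 1.0) -> bool:
    """True when the two components are less than tolerance_db apart."""
    return abs(level_difference_db(v_a, v_b)) < tolerance_db


if __name__ == "__main__":
    # Hypothetical readings: component A at 2.00 V, component B at 1.90 V.
    diff = level_difference_db(2.00, 1.90)
    print(f"Difference: {diff:.2f} dB, matched: {is_level_matched(2.00, 1.90)}")
```

The 1 dB tolerance is the figure from step 1; a tighter value can be passed as `tolerance_db` if you want a stricter match.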

As per seeing the gear or not. If this induces a bias then so be it. We have TWO EYES and TWO EARS. Looks of gear do matter and have an impact on general preference. If you made two components that could measure EXACTLY THE SAME and even sound as such, if one is prettier or more sexy and higher end looking than the other, the consumer will likely tend to want to buy it as long as the price difference is not outside their budget. Higher end a/v gear generally looks nicer, not for sound quality but for visual pleasure and desire to own. It's not a sin to be biased towards nicer looking gear.

There are so many variables that impact a person's ability to discern distinct differences between speakers or components that such a blind test is exceedingly difficult to perform and be valid. One such factor that I think no one has mentioned is age. As we age, our hearing becomes less acute especially with high frequencies.

Tom Norton in his recent review of the Polk LsiM tower speakers said that the speakers could use more top end "air". Would the younger Stephen Mejias of Stereophile agree? Would an average 18 year old agree with the conclusions of either of the above? In a blind test, would an average person be able to discern whether one speaker had more top end "air" or that one speaker sounds "dark"? Would the uninitiated even know what top end "air" is or what a "dark" toned speaker sounds like? Likely not. I'm not being critical of Tom's review; in fact, I think reviews by Tom and, for that matter, Stephen Mejias's "The Entry Level" column are consistently well thought out and concisely written. I think it's a matter of rethinking such tests and what the goals should be. How are we benefiting from saying Speaker A sounds different from Speaker B? Without a reference, it seems meaningless to me.

In a blind test, what is the average "non professional" listener being asked to do when judging between two different speakers or components? How are they going to describe what they are hearing? Is he or she to use typical audio jargon like that written in Robert Harley's respected "The Complete Guide to High End Audio" when commenting about sound (e.g., quick, lithe, agile, strident, forward, recessed, bright, uncolored, transparent, muddy, dynamic, mellow, etc.)?

In my own opinion, I think the inherent problem with the blind test is comparing Speakers A to Speakers B without a reference. In my opinion, the better and more relevant blind comparison would be for a participant to hear a musician play live in a specific venue or recording studio followed by the listener hearing the same music/sound as reproduced through Speaker A/Speaker B and judge which one sounds more like the reference "live" performance.

I'm curious about what Mike Fremer, Tom Norton, and Mark Fleischmann would have to say about this topic given their experience reviewing.

My experience with blind testing indicates that the people who advocate it as being "scientific" and inherently applicable to sound (as it is to pharmaceutical drug testing) have enormous axes to grind and if the results don't go their way, then WATCH OUT!

What's more, when the results turn out to be really stupid, you have to take a step back and reconsider the advocacy.

To wit: I was challenged to a double blind amplifier test some years ago by a writer for Audio magazine who was in the "all amplifiers that measure the same sound the same" camp. My listening experience tells me such a claim is ludicrous on its face.

So I said to him: "Fine. You set up a double blind test and I'll prove you wrong."

And so he did. At an Audio Engineering Society convention in Los Angeles some years ago.

He set it up using 5 amplifiers. For some reason he included a few amps that definitely don't measure identically, not to mention sound identical. I think he wanted to trip me up somehow.

In any case, it was double blind A/B/X with five amplifiers including a Crown DC 300 and a VTL 300 tube amplifier. These are SONIC POLAR OPPOSITES.

So I took the test. Probably 100 AES members took it too. As did John Atkinson, Stereophile's editor.

At a meeting later that afternoon we got the results:

I got 5 of 5 identifications correct. John Atkinson got 4 of 5 correct. However, in the population of audio engineers, the results were statistically insignificant! In other words they couldn't hear the difference between a Crown DC300 and a VTL 300 tube amp! These two amplifiers both measure and sound extraordinarily different yet these pros could not hear the difference!

Before I get to my conclusion, get this: because my result didn't comport with "the average" I was declared a "lucky coin" and my result was TOSSED!!!!!!!!

Now, what did I learn from this? First, the tossing of my result indicates a sort of Soviet style communism at work behind the "scientific" claim. I didn't fit in with the tester's thesis, therefore I was "tossed."!!!!!!

Whenever I tell this story skeptics say "There weren't enough samples to be a valid test," to which I reply I DIDN'T CREATE THE TEST.
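The "not enough samples" objection can be put in rough numbers. The sketch below is an illustration only, under assumptions the account above does not spell out: that each of the five identifications was a two-way choice (so a pure guesser is right half the time), that trials were independent, and that about 100 people took the test. It says nothing about whether any particular listener was guessing; it only shows how often perfect or near-perfect scores turn up by chance with five trials.

```python
from math import comb


def p_at_least(k: int, n: int, p: float = 0.5) -> float:
    """Probability of k or more correct answers in n trials when guessing
    with per-trial success probability p (binomial tail)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    listeners = 100  # assumed head count, from "probably 100" above
    p_5_of_5 = p_at_least(5, 5)
    p_4_of_5 = p_at_least(4, 5)
    print(f"Chance of 5/5 by guessing: {p_5_of_5:.4f}")
    print(f"Chance of 4/5 or better by guessing: {p_4_of_5:.4f}")
    print(f"Expected 5/5 scores among {listeners} guessers: {listeners * p_5_of_5:.1f}")
```

Under these assumptions, a handful of perfect scores would be expected from guessing alone in a group that size, which is why longer runs (say 16 or more trials per listener) are usually what people ask for before reading much into a single score either way.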

The second takeaway is this: I am experienced at this. I am an experienced listener. It was EASY for me to hear the differences among the amplifiers. It was NOT for the inexperienced. But if you were to use a population of inexperienced listeners--even among recording engineers--you would get "proof" that all amplifiers sound the same, even if the ones in the test DO NOT SOUND THE SAME!

The results of this "blind test" prove one thing: blind testing is NOT necessarily useful in audio.

On the other hand I did participate in a similar test at Harman International. It was a loudspeaker test similar to the one that produced a graph at the top of this subject.

They have a room in which there is a stage with a pneumatic series of platforms. At the push of a button, within seconds a single speaker can be moved front and center (behind a scrim so you couldn't see it) and played.

Harman wasn't interested in which speaker you liked only in seeing if the tester could consistently identify each of the five speakers. Without going into too much detail about the methodology it was about as scientifically well designed as a test could be. However, the music sounded like CRAP through all of the speakers. It was horrible edgy digital sound.

I kept saying "That speaker isn't accurate, but given how horrible the source material is, that's the one I'd prefer." Two of the speakers struck me as the most accurate. I said "those two sound more accurate but the top end is so screechy because of the source material, I couldn't live with them if all I had to listen to was that source."

There was a morning and afternoon session. At the end we got a bar graph showing how consistent were our identifications. The taller the bar, the more consistent were the identifications.

I came in second in the morning. I had a fairly tall bar. The guy who came in first had a taller bar, but everyone else? Tiny, tiny little blips!

Why? Because they "couldn't hear?" or because "all speakers sound the same?" NO!!!!!

These speakers didn't sound at all alike--except for the two Harman speakers that sounded similar and would be more difficult to identify blind.

The other subjects didn't do as well as I did because they are not as experienced or they don't care as much or whatever.

Harman had a pair of electrostatic speakers that look very sexy and physically transparent. INEXPERIENCED listeners would see those and go ga ga. But when the curtain was pulled I'm sure they heard what I heard EITHER WAY. That graph is the result of such INEXPERIENCED LISTENER BIAS.

I've taken blind cable tests too. These are very difficult for a variety of reasons but we don't listen in "tiny snippets" BUT I did get that one correct too even though I didn't know it was a cable test!

A skeptical but curious Wall Street Journal reporter set up a 'test' at the CES a few years ago, inviting people into a room to hear two identical systems set up in the room...modest systems. Small Totem speakers and I forget the amps.

He spent ten minutes talking about MP3s versus CDs and source material...and then said "take the control and switch between the two systems and tell which one you prefer, if you do prefer one over the other. Or maybe you don't hear a difference."

So I went back and forth and quickly said "system one sounds better. It's not as thin and hard as the other. But it doesn't sound like a compressed versus uncompressed audio test, whatever it is."

Turns out to be a CABLE TEST. He used lamp cord on one system and $200 Monster on the other.

John Atkinson also heard it but guess what? THE RESULTS WERE STATISTICALLY INSIGNIFICANT.

So what does that mean? Cables all sound the same? NO!!!! It means that inexperienced listeners have problems under double blind conditions.

The idea that cables don't make a difference is ABSURD... almost as absurd as relying on blind testing for audio to "prove" whatever the tester wishes to prove.

How do you think we arrived at MP3s?????

Take a bit of info away can you hear the difference "no." Okay we're good!

Take a bit more away, can you hear the difference "no". ETC.

Pretty soon you've taken it ALL away and the listeners don't hear a difference. Until you play them the full rez file versus the "slippery slope" version that supposedly is identical to the previous higher resolution A/B.

This "double blind" "trust the measurements" mentality just about ruined audio in the late '60s when awful sounding but well measuring solid state amps were introduced and again when awful sounding but well measuring CD players came out.

Even now, vinyl properly played back KILLS CDs every time. I've proven that to skeptic after skeptic. And it's not "euphonic colorations" either. I know many recording engineers, some very well known and very accomplished who think their masters on vinyl sound more accurate than on CD. Never mind how they measure...the brain is FAR more sophisticated.

My bottom line is: "double blind" testing when it comes to audio has some value but to be led around by it or by measurements is pretty much USELESS and will almost ALWAYS lead to STUPID CONCLUSIONS.

I do my reviewing "blind" in that I don't know how the products will measure but I try to be specific in the reviews in terms of how I think they will measure in-room and I'm pretty good at it, whether the speaker is WOOD or METAL, sexy or plain.

I am sorry to see the poll results showing such support for this abuse of scientific method.

Great post!

Reminds me of a Zen story:

---

The nun Wu Jincang asked the Sixth Patriarch Huineng, "I have studied the Mahaparinirvana sutra for many years, yet there are many areas I do not quite understand. Please enlighten me."

The patriarch responded, "I am illiterate. Please read out the characters to me and perhaps I will be able to explain the meaning."

Said the nun, "You cannot even recognize the characters. How are you able then to understand the meaning?"

"Truth has nothing to do with words. Truth can be likened to the bright moon in the sky. Words, in this case, can be likened to a finger. The finger can point to the moon’s location. However, the finger is not the moon. To look at the moon, it is necessary to gaze beyond the finger, right?"

---

In the context of hifi. For years I always thought Science (the finger) was pointing at the Moon (hifi).

Then I saw the Moon! It blew me away.

It dawned on me the finger was really pointing at my wallet all along, and how fixated I was on the finger.

Science will fix itself in the future. But in the meantime, at least I'm glad there's a few who don't need directions.

I must confess, the fervor with which those invested in the current hiend status quo seem to wish to discredit blind testing strikes me as inherently self-serving. There have been so many ludicrous products validated through 'subjective listening' over the years, that I do think some balance is required in the form of blind testing.

I have had the personal experience many times of 'learning' the sound of a particular component over a longer period of time - something that cannot be done practically with a blind test. So, I do concur that subjective listening ought to be the primary tool in getting the full measure of a component - but I don't see why blind testing cannot also be part of the evaluation toolbox.

I have felt for many years that hiend audio has done itself a huge disservice by endorsing products with dubious benefit claims. Whether blind testing would have prevented some of this is unclear, but it couldn't have hurt.

To say there is no use for blind testing with audio equipment is simply being closed minded, kind of like those earlier scientists who refused to believe the earth was round. There is a place for scientific methods if properly done. However, blind testing has many limitations in the everyday world because you would need to make sure to test only the variable to be examined and control for everything else; so if you're trying to listen to speakers you need to control for the amp, volume, room acoustics, etc . . . Actually seeing the product inherently leads to bias when rating it (no matter how hard you try to be objective-let’s face it you’re not!). I agree that the average consumer and many enthusiasts may have a hard time distinguishing in a blinded study, but that's because of their lack of training in what to assess. In scientific studies that require an assessor; that assessor needs proper training and validation. Then that trained assessor can properly distinguish between products. The sticky part is how to standardize assessors in the world of audio equipment due to lack of objective measures for listening tests by raters (how do you rate “soundstage” or “fullness” of music?).

I agree that conducting a true blinded test using the average consumer as the rater is impractical and probably not valid when the differences are subtle. I am all for a group of "pros” doing blinded testing of various equipment (if we can figure out the standardization of the assessor aspect) and let’s see the results! I still suspect those with a trained ear can tell the difference between systems of different quality and build. There's a lot at stake for high end audio companies who create expensive products for the consumer (and hopefully want their products to perform and sound better) who would hate to see their product be “comparable” to something that’s much less. But wouldn’t it mean that much more IF there was shown to be a difference with the right test? This could only help a consumer in their decision making for purchasing equipment. I would LOVE to see this kind of testing done to really assess audio performance as opposed to what essentially amounts to someone else's opinion (even if it is expert opinion).

In the end, consumers want to buy something for audio performance in addition to other factors such as brand name, perceived build quality, certifications, and appearance. Furthermore optimal audio performance may vary among consumers, some may prefer a more balanced sound, others may want heavy bass, others want emphasized midrange or treble. So in the real world, whether the consumer is in an AV store or at home auditioning equipment in their house, they should just narrow down their top choices based on factors that are important to them, close their eyes, have someone else do the switching, and listen to see what sounds better to them! Not exactly “scientific” but they are the ones that have to be happy with what they purchase, whatever the reason.

Scott, let me turn this around. Do you agree more with Dr. Sean Olive or Michael Fremer with reference to the value of blind testing vs. sighted testing. Do you believe you can "will away" sight bias or that it just doesn't exist, because "I know what I hear"? Something else?

I feel that blind testing is beneficial because of what I read in Michael Fremer's posts. He indicates that in a blind test he can indeed tell the difference between various implementations of certain types of components, including amplifiers and cables. In the one amplifier test he cites he was correct 5 out of 5 times and John Atkinson was correct 4 out of 5 times while all the other scores were abysmal. He also cites cable blind tests he has aced. What this tells me is that blind testing was beneficial in revealing a difference but only detectable by the very few who have sensitivity to notice. I'm not sure if that is a blessing or a curse. So perhaps the correct assertion is that there is no audible difference among cables and amps for the vast majority of listeners. Of course we are assuming we aren't throwing junk components from K-Mart into the mix. For people like me the difference is not audible, in particular for cables, whether Monster, Audioquest or Monoprice, even in a non-blind test. I sat in on a Nordost interconnect cable demonstration at the Toronto show (TAVES) last fall (great show BTW) where the host started with the cheap generic pair and moved up the cost line of Nordost cables. I tried real hard to hear a difference, but I couldn't, even though it was a sighted test. I am more sensitive when it comes to comparing speakers, especially how well they “disappear”. So for me personally, applying the law of diminishing returns, I skew my purchasing decisions away from cables and more toward other components, especially speakers, where I can tell the difference. All that said, I'd hate to go shopping and making my decisions via blind testing. That would definitely not be beneficial.

I think ABX is great if you need a null result. Like when the music industry wanted to push mp3 into the market as sounding “indistinguishable from CD”.

From my experience, I tend to agree with Steve Guttenberg's statement saying that differences diminish in blind tests. Our brain always filters, never perceives the whole content at a time. We need to study how that filtering process works - not an easy thing for an engineer! My observation is we discard subtle differences in a blind test, eliminated as non-significant information by our brain. I would suggest a "guided" test as opposed to a simple preference test, to force the brain to concentrate on particular attributes. Then it might become less relevant whether the test is sighted or blind!

The ad-hoc experiences reported in this discussion seem to confirm my observation. The first impression, while not knowing there is a test going on, may reveal the truth!

As an engineer, I don't believe in cables changing the signal. It's our perception (brain information filter) that changes and creates the illusion of hearing differences. Curiously looking forward to Michael Fremer's demo, though!

I learned while working in a stereo shop with a display behind a curtain, that with identical speakers in front and behind, listeners judged the hidden speaker as significantly better. My conclusion is that the mind tells you that the sound is coming out of a box when you see the box. With a curtain trimmed to look like a stage, the mind imagines musicians behind the curtain and makes supportive instead of disputing assumptions. I did the same comparison at home by moving the curtain just to confirm that speakers position was not the controlling factor. I couldn't tell the difference with my eyes closed, but I found it more pleasurable with the curtain with eyes open. Yes, it's self deception, but isn't that the point, to make it feel like being there by any means available?

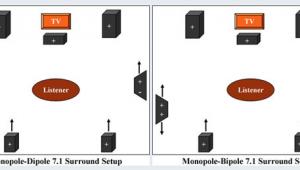

Regarding speaker position, small changes can cause audible differences. One manufacturer (sorry I don't have the name handy) built a pneumatic rotating platform to move each speaker under test into the same position to eliminate this difference and the directional cue that gives away which speaker is playing behind the curtain. Since two speakers cannot occupy the same space, are all other tests tainted?